南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 09 Optimistic Online Mirror Descent - optimistic online learning, predictable sequence, small-loss bound, gradient-variance bound, gradient-variation bound

NJUAT 南京大学 人工智能学院 SCHOOL OF ARTFICIAL INTELUGENCE,NANJING UNFVERSITY Lecture 9.Optimistic Online Mirror Descent Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 9. Optimistic Online Mirror Descent Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Beyond the Worst-Case Analysis All above regret guarantees hold against the worst case Matching the minimax optimality oblivious adversary adaptive adversary The environment is fully adversarial examination interview However,in practice: We are not always interested in the worst-case scenario Environments can exhibit specific patterns:gradual change,periodicity... We are after some more problem-dependent guarantees. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 2

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 2 Beyond the Worst-Case Analysis • All above regret guarantees hold against the worst case • Matching the minimax optimality • The environment is fully adversarial interview oblivious adversary adaptive adversary examination We are after some more problem-dependent guarantees. • However, in practice: • We are not always interested in the worst-case scenario • Environments can exhibit specific patterns: gradual change, periodicity…

Beyond the Worst-Case Analysis Beyond the worst-case analysis,achieving more adaptive results. (1)adaptivity:achieving better guarantees in easy problem instances; (2)robustness:maintaining the same worst-case guarantee. VS OPTIMISTS PESSIMISTS Be cautiously optimistic Real world Easy Data Worst-Case Data [Slides from Dylan Foster,Adaptive Online Learning @NIPS'15 workshop] Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 3

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 3 Beyond the Worst-Case Analysis • Beyond the worst-case analysis, achieving more adaptive results. (1) adaptivity: achieving better guarantees in easy problem instances; (2) robustness: maintaining the same worst-case guarantee. [Slides from Dylan Foster, Adaptive Online Learning @NIPS’15 workshop]

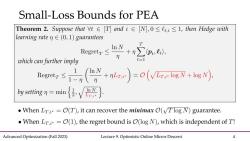

Small-Loss Bounds for PEA Theorem2.Suppose that∀t∈[T]andi∈[N],0≤Lt,i≤l,then Hedge with learning rate nE(0,1)guarantees which can further imply t=1 7≤(,+)=o(vg+s by setting n=min When LT.i=O(T),it can recover the minimax O(VTlog N)guarantee. When Lr.=O(1),the regret bound is (log N),which is independent of T! Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 4

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 4 Small-Loss Bounds for PEA

Small-Loss Bounds for PEA Addressing the unpleasant dependence on Lr.ivia self-confident tuning. Theorem4.Suppose that∀t∈[T]andi∈[N],0≤,i≤l,then Hedge with adaptive learning rate gurantees Regretr <8(Lr.+1)In N+3In N =O(V,1ogN+logN), whereL is cumulative loss the learner suffered at time t. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 5

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 5 Small-Loss Bounds for PEA

Key Analysis in Self-confident Tuning Proof.From the potential-based proof,we already know that T T ∑m.-≤-1+mN+-p: t=1 ≤Vr-1+nN+∑ P,) 1=V V∑ip,)+1 (L-1-∑号p》 How to bound this term? This is actually a common structure to handle. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 6

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 6 Key Analysis in Self-confident Tuning Proof. From the potential-based proof, we already know that How to bound this term? This is actually a common structure to handle

Small-Loss Bound for PEA:Proof Proof.From previous potential-based proof,we already known that 含m-sv+m同 Pe) Lemma 2.Let a1,a2,...,ar be non-negative real numbers.Then T T te T] Lr-Lr,≤V(亿r-1+1)lnN+4y1+Lr+1 (C,≤L∈N) <V(Lr+1)In N+4v1+Lr+1 Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 7

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 7 Small-Loss Bound for PEA: Proof Proof. From previous potential-based proof, we already known that

Small-Loss Bounds for OCO Definition 4(Small Loss).The small-loss quantity of the OCO problem(online function f:R)is defined as T Fr=min x∈X fix) t=1 One essential property for small-loss bound for OCO:self-bounding property. Corollary 1.For an L-smooth and non-negative function f:RdR,we have that IVf(x)2≤V2Lf(x),x∈X. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 8

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 8 Small-Loss Bounds for OCO One essential property for small-loss bound for OCO: self-bounding property

Achieving Small-Loss Bound We show that under the self-bounding condition,OGD can yield the desired small-loss regret bound. xt+1=Πx∈xXt-:Vf(xt)J Theorem 6(Small-loss Bound).Assume that fr is L-smooth and non-negative for all t E when settingthe regret of OGD to any comparator is bounded as Regretr=∑ix)-∑fm≤o(V+同) where Gis the empirical cumulative gradient norm. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 9

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 9 Achieving Small-Loss Bound • We show that under the self-bounding condition, OGD can yield the desired small-loss regret bound

Proof Pof)-m≤ix+2品(u--u- 玄u度 +≤01+空+ (11) (G=∑1Vf(x)) Lemma 1.Let a,a2,...,ar be non-negative real numbers.Then T T -≤1+ at t=11 Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 10

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 10 Proof Proof

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 08 Adaptive Online Convex Optimization - problem-dependent guarantee, small-loss bound, self-confident tuning, small-loss OCO, self-bounding property bound.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 07 Online Mirror Descent - OMD framework, regret analysis, primal-dual view, mirror map, FTRL, dual averaging.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 06 Prediction with Expert Advice - Hedge, minimax bound, lower bound; mirror descent(motivation and preliminary).pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 05 Online Convex Optimization - OGD, convex functions, strongly convex functions, online Newton step, exp-concave functions.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 04 GD Methods II - GD method, smooth optimization, Nesterov’s AGD, composite optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 03 GD Methods I - GD method, Lipschitz optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 02 Convex Optimization Basics; Function Properties.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 01 Introduction; Mathematical Background.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)11 图像特征分析.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)10 图像分割.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)09 形态学及其应用.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)08 压缩编码.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)07 频域滤波器.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)06 图像频域变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)05 代数运算与几何变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)04 图像复原及锐化.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)03 灰度直方图与点运算.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)02 二值图像与像素关系.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)01 概述 Digital Image Processing.pdf

- 人工智能相关文献资料:Adaptivity and Non-stationarity - Problem-dependent Dynamic Regret for Online Convex Optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 10 Online Learning in Games - two-player zero-sum games, repeated play, minimax theorem, fast convergence.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 11 Adversarial Bandits - MAB, IW estimator, Exp3, lower bound, BCO, gradient estimator, self-concordant barrier.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 12 Stochastic Bandits - MAB, UCB, linear bandits, self-normalized concentration, generalized linear bandits.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 13 Advanced Topics - non-stationary online learning, universal online learning, online ensemble, base algorithm, meta algorithm.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)课程简介 Combinatorics Introduction(主讲:尹一通).pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)基本计数 Basic enumeration.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)生成函数 Generating functions.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)筛法 Sieve methods.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Cayley公式 Cayley's formula.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Pólya计数法 Pólya's theory of counting.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Ramsey理论 Ramsey theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)存在性问题 Existence problems.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值图论 Extremal graph theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值集合论 Extremal set theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)概率法 The probabilistic method.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)匹配论 Matching theory.pdf

- 南京大学:《高级机器学习》课程教学资源(课件讲稿)01 基础(主讲:詹德川).pdf

- 南京大学:《高级机器学习》课程教学资源(课件讲稿)02 典型方法.pdf

- 《互联网营销理论与工具运用》课程教学资源(教案)项目一 走进互联网营销.pdf

- 《互联网营销理论与工具运用》课程教学资源(教案)项目二 互联网营销市场调研.pdf