Nanjing University: Advanced Optimization, course teaching resources (lecture notes), Lecture 03: GD Methods I - GD method, Lipschitz optimization

Lecture 3. Gradient Descent Method Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline
• Gradient Descent
• Convex and Lipschitz
• Polyak Step Size
• Convergence without Optimal Value
• Optimal Time-Varying Step Sizes
• Strongly Convex and Lipschitz

Part 1. Gradient Descent
• Convex Optimization Problem
• Gradient Descent
• Performance Measure
• The First Gradient Descent Lemma

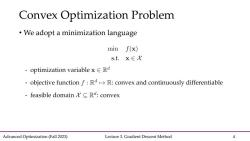

Convex Optimization Problem
• We adopt a minimization language:

$$\min_{x} f(x) \quad \text{s.t. } x \in \mathcal{X}$$

- optimization variable $x \in \mathbb{R}^d$
- objective function $f: \mathbb{R}^d \to \mathbb{R}$: convex and continuously differentiable
- feasible domain $\mathcal{X} \subseteq \mathbb{R}^d$: convex

Goal
To output a sequence $\{x_t\}$ such that $x_t$ approximates $x^*$ as $t$ grows larger.
• Function-value level: $f(\bar{x}_T) - f(x^*) \le \varepsilon(T)$
• Optimizer-value level: $\|\bar{x}_T - x^*\| \le \varepsilon(T)$

where $\bar{x}_T$ can be a statistic of the original sequence $\{x_t\}_{t=1}^{T}$, and $\varepsilon(T)$ is the approximation error, which is a function of the number of iterations $T$.

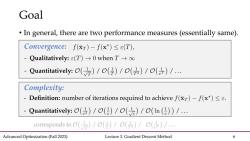

Goal
• In general, there are two performance measures (essentially the same).

Convergence: $f(\bar{x}_T) - f(x^*) \le \varepsilon(T)$
- Qualitatively: $\varepsilon(T) \to 0$ when $T \to \infty$
- Quantitatively: $O(1/\sqrt{T})$ / $O(1/T)$ / $O(1/T^2)$ / $O(e^{-T})$ / ...

Complexity:
- Definition: the number of iterations required to achieve $f(\bar{x}_T) - f(x^*) \le \varepsilon$
- Quantitatively: $O(1/\varepsilon^2)$ / $O(1/\varepsilon)$ / $O(1/\sqrt{\varepsilon})$ / $O(\ln(1/\varepsilon))$ / ..., which corresponds to $O(1/\sqrt{T})$ / $O(1/T)$ / $O(1/T^2)$ / $O(e^{-T})$ / ...
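To make the link between the two measures concrete, here is a minimal Python sketch that inverts a convergence rate into an iteration count. The function name `iterations_needed`, the rate labels, and the constant `c` (standing in for whatever the big-O hides) are illustrative assumptions, not part of the lecture.

```python
import math

# Hypothetical rate table: each entry maps T to the error bound eps(T) = c * g(T).
RATES = {
    "1/sqrt(T)": lambda T, c: c / math.sqrt(T),
    "1/T": lambda T, c: c / T,
    "1/T^2": lambda T, c: c / T**2,
    "exp(-T)": lambda T, c: c * math.exp(-T),
}


def iterations_needed(rate, eps, c=1.0):
    """Smallest T (up to rounding) with eps(T) <= eps, by solving c * g(T) = eps for T."""
    if rate == "1/sqrt(T)":
        T = (c / eps) ** 2          # complexity O(1/eps^2)
    elif rate == "1/T":
        T = c / eps                 # complexity O(1/eps)
    elif rate == "1/T^2":
        T = math.sqrt(c / eps)      # complexity O(1/sqrt(eps))
    elif rate == "exp(-T)":
        T = math.log(c / eps)       # complexity O(ln(1/eps))
    else:
        raise ValueError(f"unknown rate: {rate}")
    return max(1, math.ceil(T))


if __name__ == "__main__":
    for name in RATES:
        print(name, iterations_needed(name, eps=0.01))
```

The same target accuracy costs very different numbers of iterations depending on the rate, which is why the two measures carry the same information.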

Gradient Descent
• GD Template:

$$x_{t+1} = \Pi_{\mathcal{X}}\left[x_t - \eta_t \nabla f(x_t)\right]$$

- $x_1$ can be an arbitrary point inside the domain.
- $\eta_t > 0$ is the potentially time-varying step size (also called the learning rate).
- The projection $\Pi_{\mathcal{X}}[y] = \arg\min_{x \in \mathcal{X}} \|x - y\|$ ensures feasibility.
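The GD template can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the feasible domain is taken to be a Euclidean ball (so the projection has a closed form), the objective is a toy quadratic, and the step size $1/\sqrt{t}$ is just one possible time-varying choice. The helper names `project_onto_ball` and `projected_gd` are hypothetical.

```python
import math


def project_onto_ball(y, radius=1.0):
    """Euclidean projection onto {x : ||x||_2 <= radius} (assumed domain)."""
    norm = math.sqrt(sum(v * v for v in y))
    if norm <= radius:
        return list(y)
    return [radius * v / norm for v in y]


def projected_gd(grad, x1, step_size, num_iters, project):
    """Run x_{t+1} = project(x_t - eta_t * grad(x_t)); return all iterates."""
    xs = [list(x1)]
    for t in range(1, num_iters + 1):
        x = xs[-1]
        g = grad(x)
        eta = step_size(t)  # potentially time-varying step size eta_t
        y = [xi - eta * gi for xi, gi in zip(x, g)]
        xs.append(project(y))  # projection keeps the iterate feasible
    return xs


# Toy problem (assumption): f(x) = 0.5 * ||x - c||^2 with c outside the unit ball,
# so the constrained minimizer is c / ||c|| = (1, 0).
c = [2.0, 0.0]
grad = lambda x: [xi - ci for xi, ci in zip(x, c)]
iterates = projected_gd(grad, [0.0, 0.0], lambda t: 1.0 / math.sqrt(t), 50,
                        project_onto_ball)
x_last = iterates[-1]
```

Every iterate stays inside the unit ball because of the projection, even though the unconstrained gradient step would leave it.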

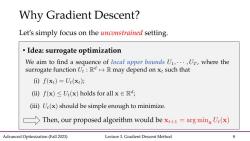

Why Gradient Descent?
Let's simply focus on the unconstrained setting.
• Idea: surrogate optimization

We aim to find a sequence of local upper bounds $U_1, \ldots, U_T$, where the surrogate function $U_t: \mathbb{R}^d \to \mathbb{R}$ may depend on $x_t$, such that
(i) $f(x_t) = U_t(x_t)$;
(ii) $f(x) \le U_t(x)$ holds for all $x \in \mathbb{R}^d$;
(iii) $U_t(x)$ should be simple enough to minimize.

Then, our proposed algorithm would be $x_{t+1} = \arg\min_{x} U_t(x)$.

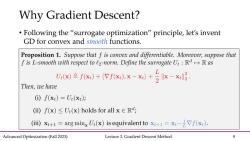

Why Gradient Descent?
• Following the "surrogate optimization" principle, let's invent GD for convex and smooth functions.

Proposition 1. Suppose that $f$ is convex and differentiable. Moreover, suppose that $f$ is $L$-smooth with respect to the $\ell_2$-norm. Define the surrogate $U_t: \mathbb{R}^d \to \mathbb{R}$ as

$$U_t(x) \triangleq f(x_t) + \langle \nabla f(x_t), x - x_t \rangle + \frac{L}{2}\|x - x_t\|_2^2.$$

Then, we have
(i) $f(x_t) = U_t(x_t)$;
(ii) $f(x) \le U_t(x)$ holds for all $x \in \mathbb{R}^d$;
(iii) $x_{t+1} = \arg\min_{x} U_t(x)$ is equivalent to $x_{t+1} = x_t - \frac{1}{L}\nabla f(x_t)$.
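Claim (iii) of Proposition 1 can be checked with a first-order optimality condition. The following is a short derivation sketch, not the lecture's own proof: since the surrogate is a quadratic in $x$ whose Hessian is $L \cdot I$ with $L > 0$, it is strongly convex, so its unique minimizer is the point where the gradient vanishes.

```latex
\begin{align*}
\nabla U_t(x) &= \nabla f(x_t) + L\,(x - x_t), \\
\nabla U_t(x) = 0 \;&\Longleftrightarrow\; x = x_t - \frac{1}{L}\,\nabla f(x_t).
\end{align*}
```

In other words, minimizing the surrogate is exactly a gradient step with step size $1/L$. Claim (i) follows by substituting $x = x_t$, and claim (ii) is the usual quadratic upper bound implied by $L$-smoothness.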

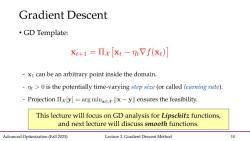

Gradient Descent
• GD Template:

$$x_{t+1} = \Pi_{\mathcal{X}}\left[x_t - \eta_t \nabla f(x_t)\right]$$

- $x_1$ can be an arbitrary point inside the domain.
- $\eta_t > 0$ is the potentially time-varying step size (also called the learning rate).
- The projection $\Pi_{\mathcal{X}}[y] = \arg\min_{x \in \mathcal{X}} \|x - y\|$ ensures feasibility.

This lecture will focus on GD analysis for Lipschitz functions, and the next lecture will discuss smooth functions.
