Nanjing University: Advanced Optimization course teaching resources (lecture notes), Lecture 08 Adaptive Online Convex Optimization - problem-dependent guarantee, small-loss bound, self-confident tuning, small-loss OCO, self-bounding property bound


Lecture 8. Adaptive Online Convex Optimization Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)


Outline
• Motivation
• Small-Loss Bounds
  • Small-Loss bound for PEA
  • Self-confident Tuning
  • Small-Loss bound for OCO

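General Regret Analysis for OMD

OMD update: $x_{t+1} = \arg\min_{x \in \mathcal{X}} \ \eta_t \langle x, \nabla f_t(x_t) \rangle + D_\psi(x, x_t)$

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds:

$$f_t(x_t) - f_t(u) \le \underbrace{\frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big)}_{\text{bias term (range term)}} + \underbrace{\frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2}_{\text{variance term (stability term)}} - \underbrace{\frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)}_{\text{negative term}}$$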
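The Mirror Descent Lemma can be checked numerically in the simplest setting. The sketch below assumes the Euclidean regularizer $\psi(x) = \frac{1}{2}\|x\|_2^2$ (so $\lambda = 1$ and $D_\psi(a, b) = \frac{1}{2}\|a - b\|_2^2$), a unit L2 ball as the feasible set, and linear losses $f_t(x) = \langle g, x \rangle$. These choices and all function names are illustrative assumptions, not taken from the slides.

```python
import numpy as np

def project_l2_ball(x, radius=1.0):
    """Euclidean projection onto the L2 ball (assumed feasible set)."""
    norm = np.linalg.norm(x)
    return x if norm <= radius else x * (radius / norm)

def bregman_euclid(a, b):
    """Bregman divergence of psi(x) = 0.5 * ||x||_2^2."""
    return 0.5 * float(np.dot(a - b, a - b))

def lemma_gap(x_t, u, grad, eta, radius=1.0):
    """Right-hand side minus left-hand side of the lemma for f_t(x) = <grad, x>."""
    # With the Euclidean regularizer, the OMD update is projected gradient descent.
    x_next = project_l2_ball(x_t - eta * grad, radius)
    lhs = float(np.dot(grad, x_t - u))
    rhs = ((bregman_euclid(u, x_t) - bregman_euclid(u, x_next)) / eta  # bias term
           + eta * float(np.dot(grad, grad))                           # variance term, lambda = 1
           - bregman_euclid(x_next, x_t) / eta)                        # negative term
    return rhs - lhs

rng = np.random.default_rng(0)
gaps = []
for _ in range(1000):
    x_t = project_l2_ball(rng.normal(size=3))
    u = project_l2_ball(rng.normal(size=3))
    grad = rng.normal(size=3)
    gaps.append(lemma_gap(x_t, u, grad, eta=0.1))
min_gap = min(gaps)  # the lemma predicts a non-negative gap, up to rounding
```

When the projection is inactive, the gap is zero up to rounding, so in this Euclidean case the negative term is exactly what makes the bound tight.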


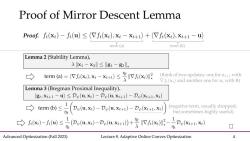

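Proof of Mirror Descent Lemma

Proof.

$$f_t(x_t) - f_t(u) \le \underbrace{\langle \nabla f_t(x_t), x_t - x_{t+1} \rangle}_{\text{term (a)}} + \underbrace{\langle \nabla f_t(x_t), x_{t+1} - u \rangle}_{\text{term (b)}}$$

Lemma 2 (Stability Lemma). $\lambda \|x_1 - x_2\| \le \|g_1 - g_2\|_*$

$$\text{term (a)} = \langle \nabla f_t(x_t), x_t - x_{t+1} \rangle \le \frac{\eta_t}{\lambda} \|\nabla f_t(x_t)\|_*^2$$

(think of two updates: one for $x_{t+1}$ with $\nabla f_t(x_t)$ and another one for $x_t$ with $0$)

Lemma 3 (Bregman Proximal Inequality). $\langle g_t, x_{t+1} - u \rangle \le D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)$

$$\text{term (b)} \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)\big)$$

(negative term, usually dropped; but sometimes highly useful)

$$\Longrightarrow \ f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2 - \frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)$$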


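General Analysis Framework for OMD

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds:

$$f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2$$

Using Lemma 1, we can easily prove the following regret bound for OMD.

Theorem 4 (General Regret Bound for OMD). Assume $\psi$ is $\lambda$-strongly convex w.r.t. $\|\cdot\|$ and $\eta_t = \eta$, $\forall t \in [T]$. Then, for all $u \in \mathcal{X}$, the following regret bound holds:

$$\sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(u) \le \frac{D_\psi(u, x_1)}{\eta} + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2$$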
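For reference, the step from Lemma 1 to Theorem 4 is a telescoping sum. The derivation below is a sketch. The constants $D$ and $G$ in the final tuning step are assumptions introduced here for illustration (an upper bound $D_\psi(u, x_1) \le D^2$ and a gradient bound $\|\nabla f_t(x_t)\|_* \le G$); they do not appear on the slide.

```latex
\begin{align*}
\sum_{t=1}^{T} \big(f_t(x_t) - f_t(u)\big)
  &\le \frac{1}{\eta} \sum_{t=1}^{T} \big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big)
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
  &= \frac{1}{\eta} \big(D_\psi(u, x_1) - D_\psi(u, x_{T+1})\big)
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
  &\le \frac{D_\psi(u, x_1)}{\eta}
     + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2
     && \text{(since } D_\psi(u, x_{T+1}) \ge 0\text{)} \\
  &\le \frac{D^2}{\eta} + \frac{\eta G^2 T}{\lambda}
   = 2 D G \sqrt{\frac{T}{\lambda}}
     && \text{(assumed bounds, with } \eta = \tfrac{D}{G}\sqrt{\lambda / T}\text{)}
\end{align*}
```

The last line recovers the worst-case $O(\sqrt{T})$ rate. The line before it still depends on the observed gradient norms.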


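Online Mirror Descent

• Our previously mentioned algorithms can all be covered by OMD.

| Algo. | OMD/proximal form | $\psi(\cdot)$ | $\eta_t$ | $\mathrm{Regret}_T$ |
| --- | --- | --- | --- | --- |
| OGD for convex | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_2^2$ | $\frac{1}{2} \lVert x \rVert_2^2$ | $\frac{1}{\sqrt{t}}$ | $O(\sqrt{T})$ |
| OGD for strongly c. | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_2^2$ | $\frac{1}{2} \lVert x \rVert_2^2$ | $\frac{1}{\sigma t}$ | $O(\frac{1}{\sigma} \log T)$ |
| ONS for exp-concave | $x_{t+1} = \arg\min_{x \in \mathcal{X}} \eta_t \langle x, \nabla f_t(x_t) \rangle + \frac{1}{2} \lVert x - x_t \rVert_{A_t}^2$ | $\frac{1}{2} \lVert x \rVert_{A_t}^2$ | $\frac{1}{\gamma}$ | $O(\frac{d}{\gamma} \log T)$ |
| Hedge for PEA | $x_{t+1} = \arg\min_{x \in \Delta_N} \eta_t \langle x, \nabla f_t(x_t) \rangle + \mathrm{KL}(x \,\Vert\, x_t)$ | $\sum_{i=1}^{N} x_i \log x_i$ | $\sqrt{\frac{\ln N}{T}}$ | $O(\sqrt{T \log N})$ |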
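Two of these OMD instances have well-known closed-form updates, sketched below: the Euclidean regularizer gives projected gradient descent, and the negative-entropy regularizer on the simplex gives the multiplicative-weights (Hedge) update. The L2-ball feasible set, the function names, and the example inputs are assumptions made for illustration.

```python
import numpy as np

def ogd_step(x, grad, eta, radius=1.0):
    """OMD step with psi(x) = 0.5 * ||x||_2^2 over an L2 ball (assumed domain):
    a gradient step followed by Euclidean projection."""
    y = x - eta * grad
    norm = np.linalg.norm(y)
    return y if norm <= radius else y * (radius / norm)

def hedge_step(p, loss, eta):
    """OMD step with negative entropy over the simplex: multiplicative weights.
    Shifting the losses by their minimum does not change the normalized result."""
    w = p * np.exp(-eta * (loss - loss.min()))
    return w / w.sum()

def kl_omd_objective(q, p, loss, eta):
    """The objective eta * <q, loss> + KL(q || p) that hedge_step minimizes
    (q and p are assumed strictly positive)."""
    return eta * float(np.dot(q, loss)) + float(np.sum(q * np.log(q / p)))

# A step that leaves the unit ball is projected back onto its boundary.
x_new = ogd_step(np.array([0.9, 0.0]), np.array([-1.0, 0.0]), eta=0.5)

# Starting from uniform weights, the expert with the smaller loss gains weight.
p_new = hedge_step(np.full(3, 1.0 / 3.0), np.array([0.0, 1.0, 1.0]), eta=1.0)
```

Comparing `kl_omd_objective(p_new, ...)` against the same objective at other points of the simplex is a quick way to confirm that the closed form is the minimizer.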


Online Mirror Descent (continued)

• The regret bounds of these four OMD instances (OGD for convex, OGD for strongly convex, ONS for exp-concave, Hedge for PEA) are minimax optimal.



Beyond the Worst-Case Analysis
• All above regret guarantees hold against the worst case
  • Matching the minimax optimality
  • The environment is fully adversarial (oblivious adversary: examination; adaptive adversary: interview)
• However, in practice:
  • We are not always interested in the worst-case scenario
  • Environments can exhibit specific patterns: gradual change, periodicity…

We are after some more problem-dependent guarantees.


Beyond the Worst-Case Analysis
• Beyond the worst-case analysis, achieving more adaptive results.
(1) adaptivity: achieving better guarantees in easy problem instances;
(2) robustness: maintaining the same worst-case guarantee.

[Figure: optimists vs. pessimists; easy data, real world, worst-case data; "Be cautiously optimistic"]
[Slides from Dylan Foster, Adaptive Online Learning @NIPS’15 workshop]

Prediction with Expert Advice
• Recall the PEA setup

At each round $t = 1, 2, \ldots$
(1) the player first picks a weight $p_t$ from a simplex $\Delta_N$;
(2) and simultaneously environments pick a loss vector $\ell_t \in \mathbb{R}^N$;
(3) the player suffers loss $f_t(p_t) \triangleq \langle p_t, \ell_t \rangle$, observes $\ell_t$ and updates the model.

• Performance measure: regret

$$\mathrm{Regret}_T \triangleq \sum_{t=1}^{T} \langle p_t, \ell_t \rangle - \min_{i \in [N]} \sum_{t=1}^{T} \ell_{t,i}$$

benchmark the performance with respect to the best expert
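The regret definition above translates directly into a few lines of code. The following is a minimal sketch. The function name `pea_regret`, the array layout (one row per round), and the toy loss sequence are assumptions chosen for illustration.

```python
import numpy as np

def pea_regret(weights, losses):
    """Regret of a weight sequence against the best fixed expert in hindsight.

    weights: array of shape (T, N), each row a point on the simplex (p_t).
    losses:  array of shape (T, N), each row a loss vector (ell_t).
    """
    weights = np.asarray(weights, dtype=float)
    losses = np.asarray(losses, dtype=float)
    player_loss = np.sum(weights * losses)        # sum_t <p_t, ell_t>
    best_expert_loss = losses.sum(axis=0).min()   # min_i sum_t ell_{t,i}
    return float(player_loss - best_expert_loss)

# Toy sequence with N = 2 experts over T = 4 rounds.
losses = np.array([[0.0, 1.0],
                   [0.0, 1.0],
                   [1.0, 0.0],
                   [0.0, 1.0]])

# A player that always uses uniform weights suffers 0.5 per round.
uniform = np.full((4, 2), 0.5)
regret_uniform = pea_regret(uniform, losses)

# A player that always follows expert 0 (the best expert here) has zero regret.
follow_best = np.tile([1.0, 0.0], (4, 1))
regret_best = pea_regret(follow_best, losses)
```

Here expert 0 has cumulative loss 1 and expert 1 has cumulative loss 3, so the uniform player (total loss 2) has regret 1.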

