南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 10 Online Learning in Games - two-player zero-sum games, repeated play, minimax theorem, fast convergence

NJUA 南京大学 人工智能学院 SCHOOL OF ARTFICIAL INTELUGENCE,NANJING UNFVERSITY Lecture 10.Online Learning in Games Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 10. Online Learning in Games Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline Two-player Zero-sum Games Minimax Theorem ·Repeated Play Faster Convergence via Adaptivity Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 2

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 2 Outline • Two-player Zero-sum Games • Minimax Theorem • Repeated Play • Faster Convergence via Adaptivity

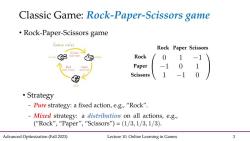

Classic Game:Rock-Paper-Scissors game Rock-Paper-Scissors game Game rules Rock Paper Scissors Seissoys apar Rock 01-1 Paper Paper 0 Scissors Strategy -Pure strategy:a fixed action,e.g.,"Rock". -Mixed strategy:a distribution on all actions,e.g., ("Rock","Paper","Scissors")=(1/3,1/3,1/3). Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 3

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 3 Classic Game: Rock-Paper-Scissors game • Rock-Paper-Scissors game • Strategy Rock Paper Scissors Rock Paper Scissors

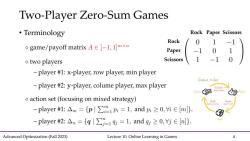

Two-Player Zero-Sum Games Terminology Rock Paper Scissors Rock 0 1 game/payoff matrix A[-1,1]mxm Paper -1 0 1 two players Scissors 1 -1 0 -player #1:x-player,row player,min player Game rules -player #2:y-player,colume player,max player action set(focusing on mixed strategy) -player#1:△m={p|∑1p:=1,andp:≥0,i∈[m}. -player#2:△n={g∑-1g=1,andg≥0,j∈m. Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 4

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 4 Two-Player Zero-Sum Games • Terminology Rock Paper Scissors Rock Paper Scissors

Two-Player Zero-Sum Games ·The protocol: The repeated game is denoted by a(payoff)matrix A[-1,1]mxm. The x-player has m actions,and the y-player has n actions. The goal of x-player is to minimize her loss and the goal of y-player is to maximize her reward. ·Given the action(x,y)∈△m×△n,the loss and reward are the same. -expected loss of x-player isosAy. -expected reward of y-player is Ereward]AAy. Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 5

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 5 Two-Player Zero-Sum Games • The protocol: •

Nash Equilibrium What is a desired state for the two players in games? Definition 2 (Nash equilibrium).A mixed strategy (x*,y*)is called a Nash equilibrium if neither player has an incentive to change her strategy given that the opponent is keeping hers,,i.e,for all x∈△n and y∈△n,it holds that x*TAy≤x*TAy*≤xTAy*. Player-y's goal is to maximize her reward,changing from y*to y will decrease reward. Player-x's goal is to minimize her loss,changing from x*to x will increase loss. Does the Nash equilibrium always exist for zero-sum games? Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 6

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 6 Nash Equilibrium • What is a desired state for the two players in games? Does the Nash equilibrium always exist for zero-sum games?

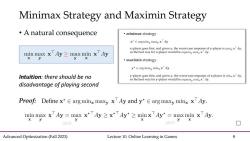

Minimax Strategy and Maximin Strategy ·ninimax strategy x*∈arg minx maxy x Ay x-player goes first,and given x,the worst-case response of y-player is maxy xAy, so the best way for x-player would be argmin,maxy xTAy. ·naximin strategy y*∈arg maxy minx x Ay y-player goes first,and given y,the worst-case response of x-player is minxxAy, so the best way for y-player would be argmaxy minxx Ay. Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 7

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 7 Minimax Strategy and Maximin Strategy • minimax strategy • maximin strategy

Minimax Strategy and Maximin Strategy A natural consequence ·ninimax strategy x*∈arg minx maxy x Ay x-player goes first,and given x,the worst-case response of y-player is maxy xAy, min max x Ay max min x Ay so the best way for x-player would be argmin maxy xAy. y ·naximin strategy y*E arg maxy minx xAy Intuition:there should be no y-player goes first,and given y,the worst-case response of x-player is minxxAy, so the best way for y-player would be argmaxy minxxAy. disadvantage of playing second Proof:Define x*E arg minx maxy x Ay and y*E argmaxy minx xAy. min max x Ay max x*Ay x*Ay*>minx Ay*max min x Ay. y y (def) (def) Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 8

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 8 Minimax Strategy and Maximin Strategy • A natural consequence Intuition: there should be no disadvantage of playing second Proof: (def) (def)

Von Neumann's Minimax Theorem For two-player zero-sum games,it is kind of surprising that the reverse direction is also true and thus minimax equals to maximin. Theorem 1.For any two-player zero-sum game A-1,1mxr,we have min max x Ay max min x Ay. x y The original proof relies on a fixed-point theorem(which is highly non-trivial) Here gives a simple and constructive proof by running an online learning algo. Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 9

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 9 Von Neumann’s Minimax Theorem • For two-player zero-sum games, it is kind of surprising that the reverse direction is also true and thus minimax equals to maximin. The original proof relies on a fixed-point theorem (which is highly non-trivial). Here gives a simple and constructive proof by running an online learning algo

Connection with Online Learning Recall the OCO framework,regret notion,and the history bits. Online Conv Another Viey History:Two-Player Zero-Sum Games ·OCO framework ·Ultimate goal::min ·feasible domain is Theory of repeated game Zero-sum 2-person games played more than once ·online functions ar 。The cumulative los · At each round t =1.2 so we need a bencl (1)the player first p Regr 黄gndt=I2, (2)and environmen ·The pl业yer auflers os -ain of oppone国 (3)the player suffe ·We hope the regret Learing to play a game1451 ercaneam from opponet'shistory of p cic updates the mod Payme epeda pobly sbotimalopponent Regret红→0asT From this point forward,we t T Nioolo Cesa-Bianchi,Online Leaming and Online Convex Optimization.Tutorial at the Simons Institute 2017 Advanced Optimization (Fall 2023) Advancod Optimization (Fall 2023) Advanced Optimization (Fall 2023) Lecture 5.Online Convex Optimization Advanced Optimization(Fall 2023) Lecture 10.Online Learning in Games 10

Advanced Optimization (Fall 2023) Lecture 10. Online Learning in Games 10 Connection with Online Learning • Recall the OCO framework, regret notion, and the history bits

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 09 Optimistic Online Mirror Descent - optimistic online learning, predictable sequence, small-loss bound, gradient-variance bound, gradient-variation bound.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 08 Adaptive Online Convex Optimization - problem-dependent guarantee, small-loss bound, self-confident tuning, small-loss OCO, self-bounding property bound.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 07 Online Mirror Descent - OMD framework, regret analysis, primal-dual view, mirror map, FTRL, dual averaging.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 06 Prediction with Expert Advice - Hedge, minimax bound, lower bound; mirror descent(motivation and preliminary).pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 05 Online Convex Optimization - OGD, convex functions, strongly convex functions, online Newton step, exp-concave functions.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 04 GD Methods II - GD method, smooth optimization, Nesterov’s AGD, composite optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 03 GD Methods I - GD method, Lipschitz optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 02 Convex Optimization Basics; Function Properties.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 01 Introduction; Mathematical Background.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)11 图像特征分析.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)10 图像分割.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)09 形态学及其应用.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)08 压缩编码.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)07 频域滤波器.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)06 图像频域变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)05 代数运算与几何变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)04 图像复原及锐化.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)03 灰度直方图与点运算.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)02 二值图像与像素关系.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)01 概述 Digital Image Processing.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 11 Adversarial Bandits - MAB, IW estimator, Exp3, lower bound, BCO, gradient estimator, self-concordant barrier.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 12 Stochastic Bandits - MAB, UCB, linear bandits, self-normalized concentration, generalized linear bandits.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 13 Advanced Topics - non-stationary online learning, universal online learning, online ensemble, base algorithm, meta algorithm.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)课程简介 Combinatorics Introduction(主讲:尹一通).pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)基本计数 Basic enumeration.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)生成函数 Generating functions.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)筛法 Sieve methods.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Cayley公式 Cayley's formula.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Pólya计数法 Pólya's theory of counting.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Ramsey理论 Ramsey theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)存在性问题 Existence problems.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值图论 Extremal graph theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值集合论 Extremal set theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)概率法 The probabilistic method.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)匹配论 Matching theory.pdf

- 南京大学:《高级机器学习》课程教学资源(课件讲稿)01 基础(主讲:詹德川).pdf

- 南京大学:《高级机器学习》课程教学资源(课件讲稿)02 典型方法.pdf

- 《互联网营销理论与工具运用》课程教学资源(教案)项目一 走进互联网营销.pdf

- 《互联网营销理论与工具运用》课程教学资源(教案)项目二 互联网营销市场调研.pdf

- 《互联网营销理论与工具运用》课程教学资源(教案)项目三 互联网搜索引擎营销.pdf