南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 05 Online Convex Optimization - OGD, convex functions, strongly convex functions, online Newton step, exp-concave functions

版像 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 5.Online Convex Optimization Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 5. Online Convex Optimization Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline Online Learning Online Convex Optimization ·Convex Functions Strongly Convex Functions Exp-concave Functions Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 2

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 2 Outline • Online Learning • Online Convex Optimization • Convex Functions • Strongly Convex Functions • Exp-concave Functions

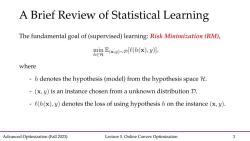

A Brief Review of Statistical Learning The fundamental goal of(supervised)learning:Risk Minimization(RM), minE(h(x),y)], h∈H where -h denotes the hypothesis(model)from the hypothesis space Ht. -(x,y)is an instance chosen from a unknown distribution D. -e(h(x),y)denotes the loss of using hypothesis h on the instance(x,y). Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 3

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 3 A Brief Review of Statistical Learning

a Brief Review of Statistical Learning Given a loss function f and distribution D,the expected risk of predictor h is R(h)=E(xD[(h(x),y)]. In practice,we can only access to a sample set S={(x1,1),...,(xm,Um)} Thus,the following empirical risk is naturally defined: Rsm=工x.8 77 i=1 Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 4

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 4 A Brief Review of Statistical Learning

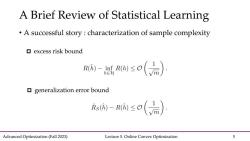

A Brief Review of Statistical Learning A successful story characterization of sample complexity ▣excess risk bound -恶so(a) generalization error bound R,a-≤o(元) Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 5

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 5 A Brief Review of Statistical Learning • A successful story : characterization of sample complexity p excess risk bound p generalization error bound

Offline Towards Online Learning Traditional statistical machine learning The training data are available offline Learning model is trained based on the offline data in a batch setting Online learning scenario In real applications,data are in the form of stream New data are being collected all the time:after observing a new data point,the model should be online updated at a constant cost Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 6

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 6 Offline Towards Online Learning • Traditional statistical machine learning • The training data are available offline • Learning model is trained based on the offline data in a batch setting • Online learning scenario • In real applications, data are in the form of stream • New data are being collected all the time: after observing a new data point, the model should be online updated at a constant cost

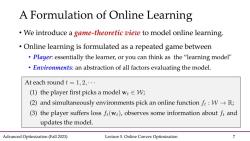

A Formulation of Online Learning We introduce a game-theoretic view to model online learning. Online learning is formulated as a repeated game between Player:essentially the learner,or you can think as the"learning model" Environments:an abstraction of all factors evaluating the model. At each round t =1,2,... (1)the player first picks a model wEW; (2)and simultaneously environments pick an online function f:w->R; (3)the player suffers loss f(wt),observes some information about fr and updates the model. Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 7

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 7 A Formulation of Online Learning • We introduce a game-theoretic view to model online learning. • Online learning is formulated as a repeated game between • Player: essentially the learner, or you can think as the “learning model" • Environments: an abstraction of all factors evaluating the model

Online Learning:Formulation At each round t=1,2,... (1)the player first picks a model wW; (2)and simultaneously environments pick an online function fr:W->R; (3)the player suffers loss ft(wt),observes some information about ft and updates the model. ·An example of online function f:W→R. Considering the task of online classification,we have (i)the loss e:Jy×Jy→R,and fi(w)=l(h(w;x:),) (i)the hypothesis function h:W×X→). =e(w xt,Ut)for simplicity Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 8

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 8 Online Learning: Formulation for simplicity • Considering the task of online classification, we have

Online Learning:Formulation At each round t=l,2,· (1)the player first picks a model wtW; (2)and simultaneously environments pick an online function fr:W->R; (3)the player suffers loss fi(wt),observes some information about fi and updates the model. Spam filtering (1)Player submits a spam classifier w ↓ (2)A mail is revealed whether it is a spam ☒ (3)Player suffers loss fi(w)and updates model Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 9

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 9 Online Learning: Formulation Spam filtering

Applications spam detection(online classification/regression):At each timet=1,2,... ·receive an email xt∈R, ·predict whether it is a spam∈{-l,+li SPAM 。see its true label y∈{-1,+1l} aggregating weather prediction(the expert problem):At each day t=1,2,... obtain temperature predictions from N models; make the final prediction by randomly following a model according to the probability p∈△w; on the next day observe the loss of each model f0,1]N. Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 10

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 10 Applications

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 04 GD Methods II - GD method, smooth optimization, Nesterov’s AGD, composite optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 03 GD Methods I - GD method, Lipschitz optimization.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 02 Convex Optimization Basics; Function Properties.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 01 Introduction; Mathematical Background.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)11 图像特征分析.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)10 图像分割.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)09 形态学及其应用.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)08 压缩编码.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)07 频域滤波器.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)06 图像频域变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)05 代数运算与几何变换.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)04 图像复原及锐化.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)03 灰度直方图与点运算.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)02 二值图像与像素关系.pdf

- 南京大学:《数字图像处理》课程教学资源(课件讲义)01 概述 Digital Image Processing.pdf

- 人工智能相关文献资料:Adaptivity and Non-stationarity - Problem-dependent Dynamic Regret for Online Convex Optimization.pdf

- 北京大学出版社:21世纪全国应用型本科电子通信系列《MATLAB基础及其应用教程》实用规划教材(共八章,2007,编著:周开利等).pdf

- 《计算机应用基础》课程教学资源(参考资料)Mathematica CheatSheet.pdf

- 《计算机应用基础》课程教学资源(参考资料)MATLAB Reference Sheet, by Giordano Fusco & Jindich Soukup.pdf

- 《计算机应用基础》课程教学资源(参考资料)MATLAB Reference Sheet, by Sherman Wiggin & Dom Dal Bello.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 06 Prediction with Expert Advice - Hedge, minimax bound, lower bound; mirror descent(motivation and preliminary).pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 07 Online Mirror Descent - OMD framework, regret analysis, primal-dual view, mirror map, FTRL, dual averaging.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 08 Adaptive Online Convex Optimization - problem-dependent guarantee, small-loss bound, self-confident tuning, small-loss OCO, self-bounding property bound.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 09 Optimistic Online Mirror Descent - optimistic online learning, predictable sequence, small-loss bound, gradient-variance bound, gradient-variation bound.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 10 Online Learning in Games - two-player zero-sum games, repeated play, minimax theorem, fast convergence.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 11 Adversarial Bandits - MAB, IW estimator, Exp3, lower bound, BCO, gradient estimator, self-concordant barrier.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 12 Stochastic Bandits - MAB, UCB, linear bandits, self-normalized concentration, generalized linear bandits.pdf

- 南京大学:《高级优化 Advanced Optimization》课程教学资源(讲稿)Lecture 13 Advanced Topics - non-stationary online learning, universal online learning, online ensemble, base algorithm, meta algorithm.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)课程简介 Combinatorics Introduction(主讲:尹一通).pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)基本计数 Basic enumeration.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)生成函数 Generating functions.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)筛法 Sieve methods.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Cayley公式 Cayley's formula.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Pólya计数法 Pólya's theory of counting.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)Ramsey理论 Ramsey theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)存在性问题 Existence problems.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值图论 Extremal graph theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)极值集合论 Extremal set theory.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)概率法 The probabilistic method.pdf

- 南京大学:《组合数学》课程教学资源(课堂讲义)匹配论 Matching theory.pdf