广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(激活函数 dropout)

Tips for Deep Learning

Tips for Deep Learning

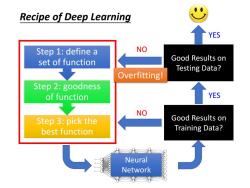

Recipe of Deep Learning YES Step 1:define a NO set of function Good Results on Testing Data? Overfitting! Step 2:goodness of function YES NO Step 3:pick the Good Results on best function Training Data? Neural Network 8

Neural Network Good Results on Testing Data? Good Results on Training Data? Step 3: pick the best function Step 2: goodness of function Step 1: define a set of function YES YES NO NO Overfitting! Recipe of Deep Learning

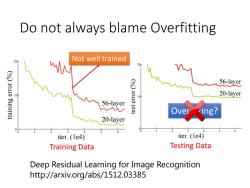

Do not always blame Overfitting Not well trained s 20 (%)I01 Sururen (%)10113 56-layer 10 20-layer 56-layer 密 Over 20-layer 6 iter.(1e4) iter.(1e4) Training Data Testing Data Deep Residual Learning for Image Recognition http://arxiv.org/abs/1512.03385

Do not always blame Overfitting Deep Residual Learning for Image Recognition http://arxiv.org/abs/1512.03385 Testing Data Overfitting? Training Data Not well trained

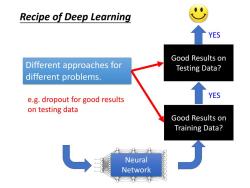

Recipe of Deep Learning YES Good Results on Different approaches for Testing Data? different problems. e.g.dropout for good results YES on testing data Good Results on Training Data? Neural Network

Neural Network Good Results on Testing Data? Good Results on Training Data? YES YES Recipe of Deep Learning Different approaches for different problems. e.g. dropout for good results on testing data

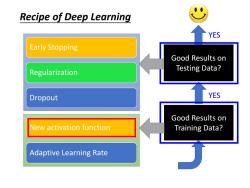

Recipe of Deep Learning YES Early Stopping Good Results on Regularization Testing Data? Dropout YES Good Results on New activation function Training Data? Adaptive Learning Rate

Good Results on Testing Data? Good Results on Training Data? YES YES Recipe of Deep Learning New activation function Adaptive Learning Rate Early Stopping Regularization Dropout

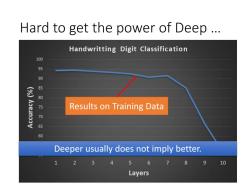

Hard to get the power of Deep .. Handwritting Digit Classification 100 8 90 50万 Results on Training Data 705 60 Deeper usually does not imply better. 2 3 4 5 6 8 910 Layers

Hard to get the power of Deep … Deeper usually does not imply better. Results on Training Data

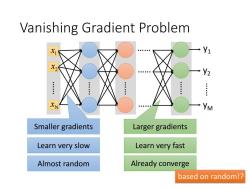

Vanishing Gradient Problem Y1 V2 : YM Smaller gradients Larger gradients Learn very slow Learn very fast Almost random Already converge based on random!?

Vanishing Gradient Problem Larger gradients Almost random Already converge based on random!? Learn very slow Learn very fast 1 x 2 x …… Nx…… …… …… …… …… …… …… y1 y2 yM Smaller gradients

Vanishing Gradient Problem Smaller gradients Small output 0.5 Large +△w input Intuitive way to compute the derivatives .. al △L △W

Vanishing Gradient Problem 1 x 2 x …… Nx…… …… …… …… …… …… …… 𝑦1 𝑦2 𝑦𝑀 …… 𝑦 ො 1 𝑦 ො 2 𝑦 ො 𝑀 𝑙 Intuitive way to compute the derivatives … 𝜕𝑙 𝜕𝑤 =? +∆𝑤 +∆𝑙 ∆𝑙 ∆𝑤 Smaller gradients Large input Small output

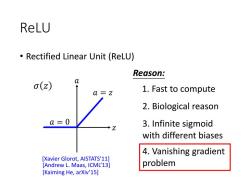

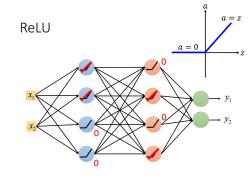

ReLU Rectified Linear Unit (ReLU) Reason: a o(Z) a=z 1.Fast to compute 2.Biological reason a=0 3.Infinite sigmoid with different biases 4.Vanishing gradient [Xavier Glorot,AISTATS'11] [Andrew L.Maas,ICML'13] problem [Kaiming He,arXiv'15]

ReLU • Rectified Linear Unit (ReLU) Reason: 1. Fast to compute 2. Biological reason 3. Infinite sigmoid with different biases 4. Vanishing gradient problem 𝑧 𝑎 𝑎 = 𝑧 𝑎 = 0 𝜎 𝑧 [Xavier Glorot, AISTATS’11] [Andrew L. Maas, ICML’13] [Kaiming He, arXiv’15]

a 三Z ReLU a=0 2 2 X2

ReLU1 x 2 x 1 y2 y 00 00 𝑧 𝑎 𝑎 = 𝑧 𝑎 = 0

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(梯度消失和梯度爆炸BN).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(自适应学习率 AdaGrad RMSProp).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(batch和动量Momentum NAG).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第9讲 神经网络的优化(梯度下降、学习率adagrad adam、随机梯度下降、特征缩放).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第9讲 神经网络的优化(损失函数).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第8讲 集成学习(决策树的演化).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第7讲 集成学习(决策树).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第6讲 线性回归模型及其求解方法 Linear Regression Model and Its Solution.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第5讲 分类问题(4.4 朴素?叶斯分类器).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第5讲 分类问题(4.3 ?持向量机 SVM).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第4讲 分类问题(4.1 分类与回归问题概述 4.2 分类性能度量?法).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第3讲 特征工程 Feature Engineering.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第2讲 模型评估与选择.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第1讲 机器学习概述.pdf

- 《机器学习》课程教学资源:《大语言模型》参考书籍PDF电子版 THE CHINESE BOOK FOR LARGE LANGUAGE MODELS(共十三章).pdf

- 《机器学习》课程教学资源:《Python数据科学手册》参考书籍PDF电子版(2016)Python Data Science Handbook,Essential Tools for Working with Data,Jake VanderPlas.pdf

- 《机器学习》课程教学资源:《统计学习方法》参考书籍PDF电子版(清华大学出版社,第2版,共22章,作者:李航).pdf

- 《机器学习》课程教学资源:《神经网络与深度学习》参考书籍PDF电子版 Neural Networks and Deep Learning(共十五章).pdf

- 《机器学习》课程教学资源:《机器学习》参考书籍PDF电子版(清华大学出版社,著:周志华).pdf

- 《机器学习》课程教学资源:《动手学深度学习》参考书籍PDF电子版 Release 2.0.0-beta0(共十六章).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第11讲 感知机模型与多层感知机(前馈神经网络,DNN BP).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第12讲 卷积神经网络(卷积和池化层).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第12讲 卷积神经网络(LeNet, AlexNet, VGG和NiN).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第13讲 卷积神经网络计算机视觉应用(Inception, 批量归一化和残差网络ResNet).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第13讲 卷积神经网络计算机视觉应用(目标检测,计算机视觉训练技巧).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第14讲 循环神经网络(RNN).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第15讲 无监督学习——降维深度学习可视化(PCA Kmeans).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第15讲 无监督学习——降维深度学习可视化(Neighbor Embedding,LLE T-SNE).pdf

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(高级循环神经网络).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(编码器解码器,Seq2seq模型,束搜索).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(嵌入向量, 词嵌入, 子词嵌入, 全局向量的词嵌入).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第17讲 注意力机制(概述).pptx

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第17讲 注意力机制(自注意力).pdf

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第18讲 变换器模型 Transformer.pptx

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第18讲 变换器模型 Transformer.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第19讲 ViT及注意力机制改进(Vision Transformers ,ViTs).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第19讲 ViT及注意力机制改进(各式各样的Attention).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第20讲 预训练模型 Pre-training of Deep Bidirectional Transformers for Language Understanding(授课:周郭许).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第21讲 生成式网络模型(自编码器 Deep Auto-encoder).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第21讲 生成式网络模型(VAE Generation).pdf