广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(梯度消失和梯度爆炸BN)

Quick Introduction of Batch Normalization Hung-yi Lee李宏毅 1

Quick Introduction of Batch Normalization Hung-yi Lee 李宏毅 1

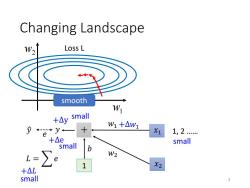

Changing Landscape W2 Loss L smooth W +△y small W1+△W1 e X1 1,2 +△ small mall b L= W2 +△L 1 X2 small 2

Changing Landscape 1 + 1, 2 …… w1 w2 Loss L 𝑦 ො 𝑦 𝑒 𝑏 𝑤1 𝑤2 𝐿 = 𝑒 small 𝑥1 𝑥2 +∆𝑤1 +∆y +∆e +∆𝐿 small smooth small small 2

Changing Landscape Loss L Loss L smooth +△y large W1 y← X1 e 1,2 +△e small same large b L= W2 range △W2 X2 100,200 +△L .s. large large

Changing Landscape 1 + 1, 2 …… 100, 200 …… w1 w2 Loss L w1 w2 Loss L 𝑦 ො 𝑦 𝑒 𝑏 𝑤1 𝑤2 𝐿 = 𝑒 small large 𝑥1 𝑥2 +∆𝑤2 +∆y +∆e +∆𝐿 large smooth steep same range large large 3

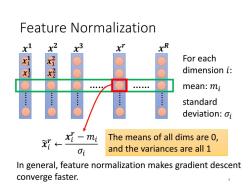

Feature normalization 3 x For each x dimension i: mean:mi : standard deviation:oi x{-mi The means of all dims are 0, ← Oi and the variances are all 1 In general,feature normalization makes gradient descent converge faster. 4

Feature Normalization ……………… …… …… …… …… 𝒙 𝟏 𝒙 𝟐 𝒙 𝟑 𝒙 𝒓 𝒙 𝑹 mean: 𝑚𝑖 standard deviation: 𝜎𝑖 𝒙𝑖 𝒓 ← 𝒙𝑖 𝒓 − 𝑚𝑖 𝜎𝑖 The means of all dims are 0, and the variances are all 1 For each dimension 𝑖: 𝒙1 𝟏 𝒙2 𝟏 𝒙1 𝟐 𝒙2 𝟐 In general, feature normalization makes gradient descent converge faster. 4

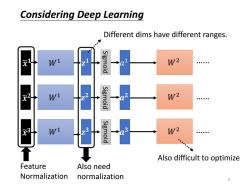

Considering Deep Learning Different dims have different ranges. Wi igmoid W2 元2 W1 Sigmoid W2 。。。。e 3 W1 63 Sigmoid W2 Also difficult to optimize Feature Also need Normalization normalization

𝒂 𝟑 𝒂 𝟐 𝑎 𝑊1 1 𝑊1 𝑊1 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 𝑊2 𝑊2 𝑊2 Sigmoid …… …… …… Sigmoid Sigmoid Feature Normalization 𝒙 𝟏 𝒙 𝟐 𝒙 𝟑 Also need normalization Different dims have different ranges. Also difficult to optimize Considering Deep Learning 5

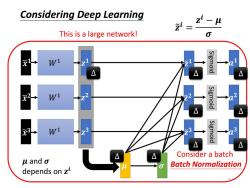

Considering Deep Learning 3 Wi 3 W1 ∑a-w2 3 W1 6

𝑊1 𝑊1 𝑊1 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 𝝁 𝝈 𝝁 = 1 3 𝑖=1 3 𝒛 𝒊 𝝈 = 1 3 𝑖=1 3 𝒛 𝒊 − 𝝁 2 𝒙 𝟏 𝒙 𝟐 𝒙 𝟑 Considering Deep Learning 6

Considering Deep Learning z2-0 This is a large network! G ≌ Wi igmoid Wi 2 22 Sigmoid 3 W1 Sigmoid Consider a batch uand o Batch Normalization depends on zi

𝑊1 𝑊1 𝑊1 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 𝝁 𝝈 𝒛 𝒊 = 𝒛 𝒊 − 𝝁 𝝈 𝒂 𝟑 𝒂 𝟐 𝒂 𝟏 Sigmoid Sigmoid Sigmoid 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 This is a large network! 𝒙 𝟏 𝒙 𝟐 𝒙 𝟑 𝝁 and 𝝈 depends on 𝒛 𝒊 Considering Deep Learning Consider a batch Batch Normalization 7 ∆ ∆ ∆ ∆ ∆ ∆ ∆ ∆ ∆

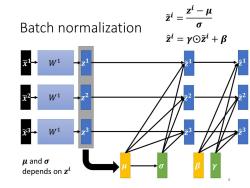

刘、 z2- Batch normalization 0 2=Y⊙z+B W1 W1 2 ≥2 22 3 W1 3 3 63 u and o depends on zt B

Batch normalization 𝑊1 𝑊1 𝑊1 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 𝝁 𝝈 𝒛 ො 𝒊 = 𝜸⨀𝒛 𝒊 + 𝜷 𝒛 ො 𝟑 𝒛 ො 𝟐 𝒛 ො 𝟏 𝒛 𝟏 𝒛 𝟐 𝒛 𝟑 𝜷 𝜸 𝒛 𝒊 = 𝒛 𝒊 − 𝝁 𝝈 𝝁 and 𝝈 depends on 𝒛 𝒊 𝒙 𝟏 𝒙 𝟐 𝒙 𝟑 8

Batch normalization -Testing i= z-汉 Wi 衣ā Z u,o are from batch? We do not always have batch at testing stage. Computing the moving average of u and o of the batches during training. ul 2 心3 π←pπ+(1-p)u

Batch normalization – Testing 𝒙 𝑊1 𝒛 𝒛 𝒛 = 𝒛 − 𝝁 𝝈 𝝁, 𝝈 are from batch? We do not always have batch at testing stage. Computing the moving average of 𝝁 and 𝝈 of the batches during training. …… 𝝁ഥ 𝝈ഥ 𝝁 𝟏 𝝁 𝟐 𝝁 𝟑 𝝁 …… 𝒕 𝝁ഥ ← 𝑝𝝁ഥ + 1 − 𝑝 𝝁 𝒕

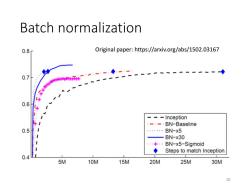

Batch normalization 0.8 Original paper:https://arxiv.org/abs/1502.03167 0.7 0.6 -Inception --BN-Baseline 0.5 ,。。gt,。 BN-x5 BN-x30 BN-x5-Sigmoid ◆ Steps to match Inception 0.4 5M 10M 15M 20M 25M 30M 10

Batch normalization Original paper: https://arxiv.org/abs/1502.03167 10

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(自适应学习率 AdaGrad RMSProp).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(batch和动量Momentum NAG).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第9讲 神经网络的优化(梯度下降、学习率adagrad adam、随机梯度下降、特征缩放).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第9讲 神经网络的优化(损失函数).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第8讲 集成学习(决策树的演化).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第7讲 集成学习(决策树).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第6讲 线性回归模型及其求解方法 Linear Regression Model and Its Solution.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第5讲 分类问题(4.4 朴素?叶斯分类器).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第5讲 分类问题(4.3 ?持向量机 SVM).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第4讲 分类问题(4.1 分类与回归问题概述 4.2 分类性能度量?法).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第3讲 特征工程 Feature Engineering.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第2讲 模型评估与选择.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第1讲 机器学习概述.pdf

- 《机器学习》课程教学资源:《大语言模型》参考书籍PDF电子版 THE CHINESE BOOK FOR LARGE LANGUAGE MODELS(共十三章).pdf

- 《机器学习》课程教学资源:《Python数据科学手册》参考书籍PDF电子版(2016)Python Data Science Handbook,Essential Tools for Working with Data,Jake VanderPlas.pdf

- 《机器学习》课程教学资源:《统计学习方法》参考书籍PDF电子版(清华大学出版社,第2版,共22章,作者:李航).pdf

- 《机器学习》课程教学资源:《神经网络与深度学习》参考书籍PDF电子版 Neural Networks and Deep Learning(共十五章).pdf

- 《机器学习》课程教学资源:《机器学习》参考书籍PDF电子版(清华大学出版社,著:周志华).pdf

- 《机器学习》课程教学资源:《动手学深度学习》参考书籍PDF电子版 Release 2.0.0-beta0(共十六章).pdf

- 西北农林科技大学:《Visual Basic程序设计基础》课程教学资源(PPT课件)第07章 数据文件.ppt

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第10讲 神经网络的优化(激活函数 dropout).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第11讲 感知机模型与多层感知机(前馈神经网络,DNN BP).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第12讲 卷积神经网络(卷积和池化层).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第12讲 卷积神经网络(LeNet, AlexNet, VGG和NiN).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第13讲 卷积神经网络计算机视觉应用(Inception, 批量归一化和残差网络ResNet).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第13讲 卷积神经网络计算机视觉应用(目标检测,计算机视觉训练技巧).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第14讲 循环神经网络(RNN).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第15讲 无监督学习——降维深度学习可视化(PCA Kmeans).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第15讲 无监督学习——降维深度学习可视化(Neighbor Embedding,LLE T-SNE).pdf

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(高级循环神经网络).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(编码器解码器,Seq2seq模型,束搜索).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第16讲 现代循环神经网络(嵌入向量, 词嵌入, 子词嵌入, 全局向量的词嵌入).pptx

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第17讲 注意力机制(概述).pptx

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第17讲 注意力机制(自注意力).pdf

- 广东工业大学:《机器学习》课程教学资源(PPT讲稿)第18讲 变换器模型 Transformer.pptx

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第18讲 变换器模型 Transformer.pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第19讲 ViT及注意力机制改进(Vision Transformers ,ViTs).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第19讲 ViT及注意力机制改进(各式各样的Attention).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第20讲 预训练模型 Pre-training of Deep Bidirectional Transformers for Language Understanding(授课:周郭许).pdf

- 广东工业大学:《机器学习》课程教学资源(课件讲义)第21讲 生成式网络模型(自编码器 Deep Auto-encoder).pdf