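Guangdong University of Technology, "Machine Learning" course materials (lecture notes), Lecture 15: Unsupervised Learning, Dimensionality Reduction and Visualization in Deep Learning (Neighbor Embedding, LLE, t-SNE)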

Unsupervised Learning: Neighbor Embedding


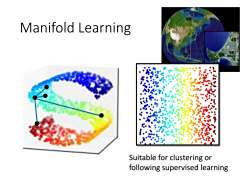

Manifold Learning: the low-dimensional result is suitable for clustering or for subsequent supervised learning.

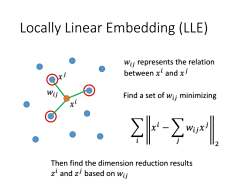

Locally Linear Embedding (LLE)

$w_{ij}$ represents the relation between $x^i$ and $x^j$.

Find a set of $w_{ij}$ minimizing

$$\sum_i \Big\lVert x^i - \sum_j w_{ij}\, x^j \Big\rVert^2$$

Then find the dimension reduction results $z^i$ and $z^j$ based on $w_{ij}$.
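A minimal NumPy sketch of this first step. It assumes the usual LLE constraints, which the slide does not spell out: $w_{ij} = 0$ unless $x^j$ is one of the $k$ nearest neighbors of $x^i$, and each row of weights sums to 1 (as in Saul and Roweis). The function name `lle_weights` and the ridge constant `reg` are hypothetical, chosen for illustration.

```python
import numpy as np

def lle_weights(X, k, reg=1e-3):
    """Hypothetical helper: weights w_ij minimising sum_i ||x_i - sum_j w_ij x_j||^2.

    Assumed constraints (standard LLE, not stated on the slide): w_ij = 0 unless
    x_j is among the k nearest neighbors of x_i, and every row of W sums to 1.
    """
    n = X.shape[0]
    W = np.zeros((n, n))
    # Pairwise squared Euclidean distances, shape (n, n).
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    for i in range(n):
        order = np.argsort(sq_dists[i])
        nbrs = order[order != i][:k]         # k nearest neighbors, excluding x_i
        Z = X[nbrs] - X[i]                   # neighbors centred on x_i, shape (k, D)
        C = Z @ Z.T                          # local Gram matrix, shape (k, k)
        trace = np.trace(C)
        # Small ridge term keeps C invertible (needed e.g. when k > D).
        C += np.eye(k) * (reg * trace if trace > 0 else reg)
        w = np.linalg.solve(C, np.ones(k))   # minimiser up to scale
        W[i, nbrs] = w / w.sum()             # rescale so that sum_j w_ij = 1
    return W


# Five points on a line: each interior point is the average of its two neighbors.
X = np.arange(5.0).reshape(-1, 1)
W = lle_weights(X, k=2)
print(np.round(W[2], 3))  # approximately 0.5 on x_1 and x_3, 0 elsewhere
```

Each row is solved independently, which is why the objective splits into one small $k \times k$ linear system per point.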

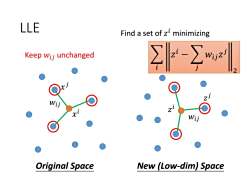

LLE

Keep $w_{ij}$ unchanged and find a set of $z^i$ minimizing

$$\sum_i \Big\lVert z^i - \sum_j w_{ij}\, z^j \Big\rVert^2$$

The same $w_{ij}$ that relates $x^i$ and $x^j$ in the original space now relates $z^i$ and $z^j$ in the new (low-dimensional) space.
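A minimal sketch of the second step, assuming the usual LLE side conditions (centred, unit-covariance $z$) that rule out the collapsed solution. Under those conditions the objective equals $\operatorname{tr}(Z^T M Z)$ with $M = (I - W)^T (I - W)$, so the embedding comes from the eigenvectors of $M$ with the smallest eigenvalues, skipping the constant eigenvector (eigenvalue 0, because each row of $W$ sums to 1). `lle_embedding` is a hypothetical name; its columns are unit-norm rather than scaled to unit variance, which only changes the overall scale.

```python
import numpy as np

def lle_embedding(W, n_components):
    """Hypothetical helper: z_i minimising sum_i ||z_i - sum_j w_ij z_j||^2 with W fixed."""
    n = W.shape[0]
    I_minus_W = np.eye(n) - W
    M = I_minus_W.T @ I_minus_W              # objective = trace(Z^T M Z)
    eigvals, eigvecs = np.linalg.eigh(M)     # eigenvalues in ascending order
    # Column 0 is the constant vector (eigenvalue 0); it carries no information.
    return eigvecs[:, 1:n_components + 1]


# Toy weights: 8 points on a ring, each reconstructed as the mean of its two neighbors.
n = 8
W = np.zeros((n, n))
for i in range(n):
    W[i, (i - 1) % n] = W[i, (i + 1) % n] = 0.5
Z = lle_embedding(W, n_components=2)
print(np.round(np.linalg.norm(Z, axis=1), 3))  # equal radii: the 2-D embedding is a circle
```

Only $W$ enters this step; the original $x^i$ are no longer needed, which is exactly the "keep $w_{ij}$ unchanged" idea.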

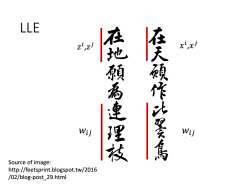

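LLE

The relation $w_{ij}$ between $x^i$ and $x^j$ is carried over unchanged to $z^i$ and $z^j$. The slide illustrates this with the couplet "在天願作比翼鳥，在地願為連理枝" ("in the sky, may we be birds flying wing to wing; on earth, may we be branches joined on one tree").

Source of image: http://feetsprint.blogspot.tw/2016/02/blog-post_29.html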

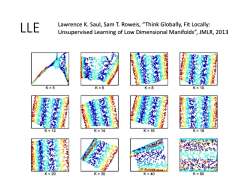

LLE

Figure: LLE results as the number of neighbors K varies from K = 5 to K = 60.

Lawrence K. Saul, Sam T. Roweis, "Think Globally, Fit Locally: Unsupervised Learning of Low Dimensional Manifolds", JMLR, 2003

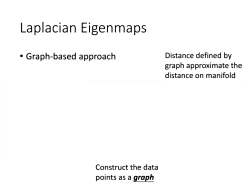

Laplacian Eigenmaps

• Graph-based approach: construct the data points as a graph.
• Distances defined by the graph approximate distances on the manifold.
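To see why graph distances can stand in for distances on the manifold, here is a small NumPy sketch. How the graph is built is an assumption (each point is connected to its k nearest neighbors, with edge length equal to the Euclidean distance), as is the use of Floyd-Warshall shortest paths as the graph distance; `knn_graph` and `graph_distances` are hypothetical names.

```python
import numpy as np

def knn_graph(X, k):
    """Hypothetical helper: symmetric k-NN graph, edge weight = Euclidean length, inf = no edge."""
    n = X.shape[0]
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    G = np.full((n, n), np.inf)
    np.fill_diagonal(G, 0.0)
    for i in range(n):
        order = np.argsort(dists[i])
        for j in order[order != i][:k]:
            G[i, j] = G[j, i] = dists[i, j]  # connect in both directions
    return G

def graph_distances(G):
    """All-pairs shortest path lengths over G (Floyd-Warshall)."""
    D = G.copy()
    for m in range(D.shape[0]):
        # Allow paths through node m: D[i, j] = min(D[i, j], D[i, m] + D[m, j]).
        D = np.minimum(D, D[:, m:m + 1] + D[m:m + 1, :])
    return D


theta = np.linspace(0.0, np.pi, 21)
X = np.c_[np.cos(theta), np.sin(theta)]      # points on a half circle (the "manifold")
D = graph_distances(knn_graph(X, k=2))
print(np.linalg.norm(X[0] - X[-1]))  # straight-line distance between the two ends
print(D[0, -1])                      # graph distance, close to the arc length pi
```

The Euclidean distance between the two ends cuts across the circle (2.0), while the graph distance follows the curve and stays close to the arc length $\pi$.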

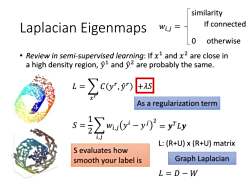

Laplacian Eigenmaps

• Review in semi-supervised learning: if $x^1$ and $x^2$ are close in a high-density region, $\hat{y}^1$ and $\hat{y}^2$ are probably the same. This is added to the loss as a regularization term $\lambda S$:

$$L = \sum_{x^r} C\left(y^r, \hat{y}^r\right) + \lambda S$$

• $S$ evaluates how smooth your labels are:

$$S = \frac{1}{2}\sum_{i,j} w_{i,j}\left(y^i - y^j\right)^2 = \boldsymbol{y}^T L \boldsymbol{y}, \qquad w_{i,j} = \begin{cases} \text{similarity} & \text{if connected} \\ 0 & \text{otherwise} \end{cases}$$

• In $\boldsymbol{y}^T L \boldsymbol{y}$, $L$ is the graph Laplacian $L = D - W$ (not the loss above), an $(R+U) \times (R+U)$ matrix over the $R$ labeled and $U$ unlabeled examples; $D$ is the diagonal degree matrix with $d_{ii} = \sum_j w_{i,j}$.
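A quick numerical check of the identity $S = \frac{1}{2}\sum_{i,j} w_{i,j}(y^i - y^j)^2 = \boldsymbol{y}^T L \boldsymbol{y}$ with $L = D - W$. The identity relies on $W$ being symmetric; `smoothness` and `graph_laplacian` are illustrative names, and the 3-node chain is a made-up example.

```python
import numpy as np

def smoothness(W, y):
    """S = 1/2 * sum_{i,j} w_ij (y_i - y_j)^2, computed term by term."""
    diff = y[:, None] - y[None, :]
    return 0.5 * np.sum(W * diff ** 2)

def graph_laplacian(W):
    """L = D - W, with D the diagonal matrix of row sums (degrees) of W."""
    return np.diag(W.sum(axis=1)) - W


# Three nodes in a chain 0 - 1 - 2, unit similarity on each edge.
W = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
L = graph_laplacian(W)
for y in (np.array([1.0, 1.0, 1.0]), np.array([1.0, 0.0, 1.0])):
    print(smoothness(W, y), y @ L @ y)   # constant labels give 0; alternating labels give 2
```

The quadratic form is what makes the regularizer convenient: $S$ is small exactly when connected nodes receive similar values.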

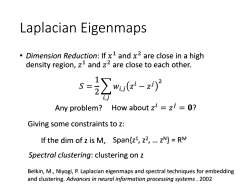

Laplacian Eigenmaps

• Dimension reduction: if $x^1$ and $x^2$ are close in a high-density region, $z^1$ and $z^2$ should be close to each other:

$$S = \frac{1}{2}\sum_{i,j} w_{i,j}\,\big\lVert z^i - z^j \big\rVert^2$$

• Any problem? Minimizing $S$ alone is trivially solved by $z^i = z^j = \mathbf{0}$.
• Give some constraints to $z$: if the dimension of $z$ is $M$, require $\operatorname{Span}\{z^1, z^2, \dots, z^N\} = \mathbb{R}^M$.
• Spectral clustering: clustering on $z$.

Belkin, M., Niyogi, P. Laplacian eigenmaps and spectral techniques for embedding and clustering. Advances in Neural Information Processing Systems, 2002.
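One concrete way to impose such a constraint, following Belkin and Niyogi, is to require $Z^T D Z = I$ (and $z$ orthogonal to the constant vector in the $D$ inner product). Minimizing $S$ then becomes the generalized eigenproblem $L z = \lambda D z$, and the embedding uses the eigenvectors with the smallest nonzero eigenvalues. The sketch below solves it through the symmetric matrix $D^{-1/2} L D^{-1/2}$ and assumes a connected graph with positive degrees; `laplacian_eigenmaps` is a hypothetical name and the two-triangle graph is a toy example.

```python
import numpy as np

def laplacian_eigenmaps(W, n_components):
    """Hypothetical helper: minimise S = 1/2 sum w_ij ||z_i - z_j||^2 s.t. Z^T D Z = I."""
    d = W.sum(axis=1)                        # degrees; assumed > 0 (connected graph)
    L = np.diag(d) - W                       # graph Laplacian L = D - W
    d_inv_sqrt = 1.0 / np.sqrt(d)
    L_sym = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]   # D^-1/2 L D^-1/2
    eigvals, U = np.linalg.eigh(L_sym)       # ascending eigenvalues
    Z = d_inv_sqrt[:, None] * U              # z = D^-1/2 u solves L z = lambda D z
    return Z[:, 1:n_components + 1]          # skip the constant solution (lambda = 0)


# Two triangles {0, 1, 2} and {3, 4, 5} joined by one weak edge (2, 3).
W = np.zeros((6, 6))
for a, b in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    W[a, b] = W[b, a] = 1.0
W[2, 3] = W[3, 2] = 0.01
z = laplacian_eigenmaps(W, n_components=1)[:, 0]
print(np.sign(z))   # one sign per triangle: clustering on z separates the two groups
```

Running a clustering algorithm such as k-means on the rows of the returned $Z$ is the "clustering on $z$" step of spectral clustering; here a single coordinate already splits the two groups by sign.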

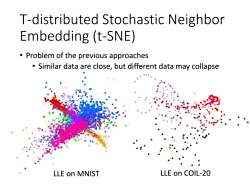

t-Distributed Stochastic Neighbor Embedding (t-SNE)

• Problem of the previous approaches: similar data are close, but different data may collapse into the same region (figures: LLE on MNIST, LLE on COIL-20).
