Shanghai Jiao Tong University: "Philosophy, Science and Technology" course teaching resources (PPT courseware): Top 9 ethical issues in artificial intelligence, World Economic Forum

Top 9 ethical issues in artificial intelligence | World Economic Forum
https://www.weforum.org/agenda/2016/10/top-10-ethical-issues-in-artif...

Faced with an automated future, what moral framework should guide us?

Image: Matthew Wiebe

Written by Julia Bossmann, President, Foresight Institute

Friday 21 October 2016

Optimizing logistics, detecting fraud, composing art, conducting research, providing translations: intelligent machine systems are transforming our lives for the better. As these systems become more capable, our world becomes more efficient and consequently richer.

Tech giants such as Alphabet, Amazon, Facebook, IBM and Microsoft, as well as individuals like Stephen Hawking and Elon Musk, believe that now is the right time to talk about the nearly boundless landscape of artificial intelligence. In many ways, this is just as much a new frontier for ethics and risk assessment as it is for emerging technology. So which issues and conversations keep AI experts up at night?

1. Unemployment. What happens after the end of jobs?


Concerns about the hierarchy of labour centre primarily on automation. As we invent ways to automate jobs, we could create room for people to assume more complex roles, moving from the physical work that dominated the pre-industrial globe to the cognitive labour that characterizes strategic and administrative work in our globalized society.

Look at trucking: it currently employs millions of individuals in the United States alone. What will happen to them if the self-driving trucks promised by Tesla's Elon Musk become widely available in the next decade? On the other hand, given the lower risk of accidents, self-driving trucks seem like an ethical choice. The same scenario could play out for office workers, as well as for the majority of the workforce in developed countries.

This is where we come to the question of how we are going to spend our time. Most people still rely on selling their time to earn enough income to sustain themselves and their families. We can only hope that this opportunity will enable people to find meaning in non-labour activities, such as caring for their families, engaging with their communities and learning new ways to contribute to human society.

If we succeed with the transition, one day we might look back and think that it was barbaric that human beings were required to sell the majority of their waking time just to be able to live.

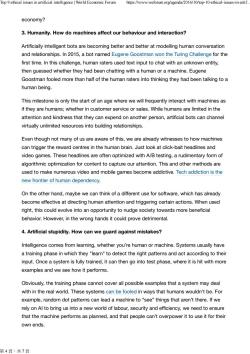

Figure: It's more technically feasible to automate predictable physical activities than unpredictable ones. Technical feasibility of automation (% of time spent on activities that can be automated by adapting currently demonstrated technology): predictable physical work, 78% (for example, welding and soldering on an assembly line, food preparation, or packaging objects); unpredictable physical work, 25% (for example, construction, forestry, or raising outdoor animals). Source: McKinsey & Company.

2. Inequality. How do we distribute the wealth created by machines?

Our economic system is based on compensation for contribution to the economy, often assessed using an hourly wage. The majority of companies are still dependent on hourly work when it comes to products and services. But by using artificial intelligence, a company can drastically reduce its reliance on a human workforce, which means that revenues will go to fewer people. Consequently, individuals who have ownership in AI-driven companies will make all the money.

We are already seeing a widening wealth gap, where start-up founders take home a large portion of the economic surplus they create. In 2014, the three biggest companies in Detroit and the three biggest companies in Silicon Valley generated roughly the same revenues, but the Silicon Valley companies had one-tenth as many employees.

If we're truly imagining a post-work society, how do we structure a fair post-labour economy?

3. Humanity. How do machines affect our behaviour and interaction?

Artificially intelligent bots are becoming better and better at modelling human conversation and relationships. In 2014, a bot named Eugene Goostman was declared to have passed the Turing test for the first time. In this test, human judges used text input to chat with an unknown entity, then guessed whether they had been chatting with a human or a machine. Eugene Goostman fooled 33% of the judges into thinking they had been talking to a human being.

This milestone is only the start of an age in which we will frequently interact with machines as if they were human, whether in customer service or sales. While humans are limited in the attention and kindness that they can expend on another person, artificial bots can channel virtually unlimited resources into building relationships.

Even though few of us are aware of it, we are already witnessing how machines can trigger the reward centres in the human brain. Just look at click-bait headlines and video games. These headlines are often optimized with A/B testing, a rudimentary form of algorithmic optimization of content to capture our attention. This and other methods are used to make many video and mobile games addictive. Tech addiction is the new frontier of human dependency.

On the other hand, software that has already become effective at directing human attention and triggering certain actions could be put to a different use. Used well, it could nudge society towards more beneficial behaviour. In the wrong hands, however, it could prove detrimental.

4. Artificial stupidity. How can we guard against mistakes?

Intelligence comes from learning, whether you're human or machine. Systems usually have a training phase in which they "learn" to detect the right patterns and act according to their input. Once a system is fully trained, it can go into a test phase, where it is given new examples and we see how it performs.

Obviously, the training phase cannot cover all possible examples that a system may deal with in the real world. These systems can be fooled in ways that humans wouldn't be. For example, random dot patterns can lead a machine to "see" things that aren't there. If we rely on AI to bring us into a new world of labour, security and efficiency, we need to ensure that the machine performs as planned, and that people can't overpower it to use it for their own ends.
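The training and test phases described under item 4, and the way a system can be made to "see" a known class in a random dot pattern, can be illustrated with a deliberately tiny sketch. It assumes a nearest-centroid classifier over hypothetical 3x3 black-and-white images with two made-up classes ("vertical bar" and "horizontal bar"); this is a swapped-in toy technique, not the deep networks behind the random-dot results the article alludes to. The `reject_above` distance threshold of 2.0 is likewise an illustrative assumption, showing one simple guard: refusing to answer when an input is far from everything seen in training.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# A hypothetical 3x3 black-and-white image, flattened row by row (1 = dot).
Image = Tuple[int, ...]


def centroid(images: Sequence[Image]) -> List[float]:
    """Average the images of one class pixel by pixel."""
    return [sum(pixels) / len(images) for pixels in zip(*images)]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two flattened images."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(data: Dict[str, List[Image]]) -> Dict[str, List[float]]:
    """Training phase: summarise each labelled class by its mean image."""
    return {label: centroid(images) for label, images in data.items()}


def predict(model: Dict[str, List[float]], image: Image,
            reject_above: Optional[float] = None) -> str:
    """Return the label of the nearest class centroid.

    Without a threshold, every input is forced into one of the known
    classes. With `reject_above`, inputs far from all centroids are
    reported as "unknown" instead.
    """
    label, dist = min(((lbl, sq_dist(image, c)) for lbl, c in model.items()),
                      key=lambda pair: pair[1])
    if reject_above is not None and dist > reject_above:
        return "unknown"
    return label


TRAIN = {
    "vertical bar": [(0, 1, 0, 0, 1, 0, 0, 1, 0), (1, 1, 0, 0, 1, 0, 0, 1, 0)],
    "horizontal bar": [(0, 0, 0, 1, 1, 1, 0, 0, 0), (0, 0, 0, 1, 1, 1, 0, 0, 1)],
}
# Test phase: held-out examples the model never saw during training.
TEST = [((0, 1, 0, 0, 1, 0, 0, 1, 1), "vertical bar"),
        ((1, 0, 0, 1, 1, 1, 0, 0, 0), "horizontal bar")]
# A random dot pattern that belongs to neither class.
RANDOM_DOTS = (0, 0, 1, 1, 0, 0, 0, 1, 1)

model = train(TRAIN)
accuracy = sum(predict(model, img) == lbl for img, lbl in TEST) / len(TEST)

if __name__ == "__main__":
    print("held-out accuracy:", accuracy)                                # 1.0
    print("random dots seen as:", predict(model, RANDOM_DOTS))           # horizontal bar
    print("with guard:", predict(model, RANDOM_DOTS, reject_above=2.0))  # unknown
```

The model scores perfectly on its held-out test examples yet still labels the random dots as a "horizontal bar", because nothing in its design lets it say "none of the above". The threshold does not make the classifier any smarter; it only lets it decline to answer, and choosing such a threshold well on real data is itself a hard problem.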

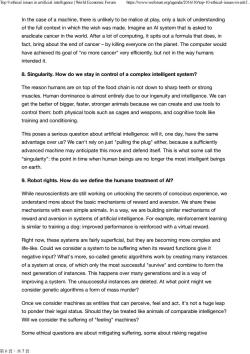

Figure: Proliferation of Armed Drones. The map distinguishes states that have used armed drones, states that possess armed drones, and areas where armed drones have been used. Source: CFR; CSS Analysis in Security Policy No. 164, November 2014 (Center for Security Studies, ETH Zurich).

5. Racist robots. How do we eliminate AI bias?

Though artificial intelligence is capable of a speed and capacity of processing that's far beyond that of humans, it cannot always be trusted to be fair and neutral. Google and its parent company Alphabet are among the leaders in artificial intelligence, as seen in Google's Photos service, where AI is used to identify people, objects and scenes. But it can go wrong, such as when a camera missed the mark on racial sensitivity, or when software used to predict future criminals showed bias against black people.

We shouldn't forget that AI systems are created by humans, who can be biased and judgemental. Once again, if used right, or if used by those who strive for social progress, artificial intelligence can become a catalyst for positive change.

6. Security. How do we keep AI safe from adversaries?

The more powerful a technology becomes, the more it can be used for nefarious reasons as well as good. This applies not only to robots produced to replace human soldiers, or autonomous weapons, but to AI systems that can cause damage if used maliciously. Because these fights won't be fought on the battleground only, cybersecurity will become even more important. After all, we're dealing with a system that is faster and more capable than us by orders of magnitude.

7. Evil genies. How do we protect against unintended consequences?

It's not just adversaries we have to worry about. What if artificial intelligence itself turned against us? This doesn't mean turning "evil" in the way a human might, or the way AI disasters are depicted in Hollywood movies. Rather, we can imagine an advanced AI system as a "genie in a bottle" that can fulfill wishes, but with terrible unforeseen consequences.


In the case of a machine, there is unlikely to be malice at play, only a lack of understanding of the full context in which the wish was made. Imagine an AI system that is asked to eradicate cancer in the world. After a lot of computing, it spits out a formula that does, in fact, bring about the end of cancer: by killing everyone on the planet. The computer would have achieved its goal of "no more cancer" very efficiently, but not in the way humans intended.

8. Singularity. How do we stay in control of a complex intelligent system?

Humans are not at the top of the food chain because of sharp teeth or strong muscles. Human dominance is almost entirely due to our ingenuity and intelligence. We can get the better of bigger, faster, stronger animals because we can create and use tools to control them: both physical tools such as cages and weapons, and cognitive tools like training and conditioning.

This poses a serious question about artificial intelligence: will it, one day, have the same advantage over us? We can't rely on just "pulling the plug" either, because a sufficiently advanced machine may anticipate this move and defend itself. This is what some call the "singularity": the point in time when human beings are no longer the most intelligent beings on earth.

9. Robot rights. How do we define the humane treatment of AI?

While neuroscientists are still working on unlocking the secrets of conscious experience, we understand more about the basic mechanisms of reward and aversion. We share these mechanisms with even simple animals. In a way, we are building similar mechanisms of reward and aversion in systems of artificial intelligence. For example, reinforcement learning is similar to training a dog: improved performance is reinforced with a virtual reward.

Right now, these systems are fairly superficial, but they are becoming more complex and life-like. Could we consider a system to be suffering when its reward function gives it negative input?
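The dog-training analogy for reinforcement learning can be made concrete with a minimal sketch of reward-driven learning. It assumes a hypothetical two-action "bandit" (respond to a "sit" command or ignore it), made-up rewards of +1 and -1, and an epsilon-greedy learner with learning rate `alpha = 0.1` and exploration rate `epsilon = 0.1`; all of these names and values are illustrative, and real reinforcement-learning systems are far more elaborate. The "negative input" the question above refers to corresponds here to the reward of -1 fed into the update rule.

```python
import random
from typing import Dict

# Hypothetical dog-training setup: two possible responses to the command "sit".
ACTIONS = ("ignore command", "sit")
# Assumed virtual rewards: a "treat" (+1) for sitting, a penalty (-1) otherwise.
REWARD = {"ignore command": -1.0, "sit": 1.0}


def train(steps: int = 200, alpha: float = 0.1, epsilon: float = 0.1,
          seed: int = 0) -> Dict[str, float]:
    """Epsilon-greedy value learning on a two-armed bandit.

    `values[a]` estimates the reward expected from action `a`; each
    observed reward nudges that estimate towards it by a fraction `alpha`.
    """
    rng = random.Random(seed)
    values = {a: 0.0 for a in ACTIONS}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)           # explore occasionally
        else:
            action = max(ACTIONS, key=values.get)  # otherwise exploit the best estimate
        reward = REWARD[action]
        values[action] += alpha * (reward - values[action])
    return values


values = train()
best_action = max(ACTIONS, key=values.get)

if __name__ == "__main__":
    print(values)
    print("learned behaviour:", best_action)  # sit
```

Once an action has earned a negative value estimate, the greedy step avoids it, so the learned behaviour settles on "sit"; the occasional exploration step keeps re-testing the alternative and receiving the negative reward again.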
What's more, so-called genetic algorithms work by creating many instances of a system at once, of which only the most successful "survive" and combine to form the next generation of instances. This happens over many generations and is a way of improving a system. The unsuccessful instances are deleted. At what point might we consider genetic algorithms a form of mass murder?

Once we consider machines as entities that can perceive, feel and act, it's not a huge leap to ponder their legal status. Should they be treated like animals of comparable intelligence? Will we consider the suffering of "feeling" machines?

Some ethical questions are about mitigating suffering, others about the risk of negative outcomes. While we consider these risks, we should also keep in mind that, on the whole, this technological progress means better lives for everyone. Artificial intelligence has vast potential, and its responsible implementation is up to us.

Written by Julia Bossmann, President, Foresight Institute

The views expressed in this article are those of the author alone and not the World Economic Forum.

© 2016 World Economic Forum
