电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 03 MEMORY AND DATA LOCALITY

LECTURE 3-MEMORY AND DATA LOCALITY

LECTURE 3 – MEMORY AND DATA LOCALITY

Memory access efficiency Tiled Matrix Multiplication Tiled Matrix Multiplication Kernel Handling Boundary Conditions in Tiling Tiled Kernel for Arbitrary Matrix Dimensions Universityf Electr Science and TachnoloChina

Memory access efficiency Tiled Matrix Multiplication Tiled Matrix Multiplication Kernel Handling Boundary Conditions in Tiling Tiled Kernel for Arbitrary Matrix Dimensions

OBIECTIVE .To learn to effectively use the CUDA memory types in a parallel program .Importance of memory access efficiency Registers,shared memory,global memory .Scope and lifetime 电子料发女学 University of ElectriScience and TachnolopChina O

▪To learn to effectively use the CUDA memory types in a parallel program ▪ Importance of memory access efficiency ▪ Registers, shared memory, global memory ▪ Scope and lifetime

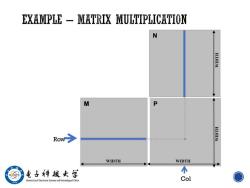

EXAMPLE-MATRIX MULTIPLICATION N H.LQIM M P Row H.LCIA WIDTH WIDTH 电子料效女学 个 niversitof Electr Science and TachnoloChina Col O

M N P WIDTH WIDTH WIDTH Row WIDTH Col

A BASIC MATRIX MULTIPLICATION global void MatrixMulKernel(float*M,float*N,float*P,int Width) /Calculate the row index of the p element and M int Row blockIdx.y*blockDim.y+threadIdx.y; /Calculate the column index of P and N int Col blockIdx.x*blockDim.x+threadIdx.x; if ((Row Width)&&(Col Width)){ float Pvalue 0; /each thread computes one element of the block sub-matrix for (int k 0;k Width;++k){ Pvalue +M[Row*Width+k]*N[k*Width+Col]; P[Row*Width+Col]Pvalue; 电子料烛女学 University of Electreaie Science and Technolory of China O

__global__ void MatrixMulKernel(float* M, float* N, float* P, int Width) { // Calculate the row index of the P element and M int Row = blockIdx.y*blockDim.y+threadIdx.y; // Calculate the column index of P and N int Col = blockIdx.x*blockDim.x+threadIdx.x; if ((Row < Width) && (Col < Width)) { float Pvalue = 0; // each thread computes one element of the block sub-matrix for (int k = 0; k < Width; ++k) { Pvalue += M[Row*Width+k]*N[k*Width+Col]; } P[Row*Width+Col] = Pvalue; } }

HOW ABOUT PERFORMANCE ON A GPU CGMA ratio (Compute to Global Memory Access ratio):The number of floating-point calculations performed for each access to the global memory within a region of a CUDA kernel. The bigger,the better. What is the CGMA of Basic Matrix Multiplication? 电子料战女学 Universityof Electri Science and Tachnolopf China O

▪ CGMA ratio(Compute to Global Memory Access ratio):The number of floating-point calculations performed for each access to the global memory within a region of a CUDA kernel. ▪ The bigger, the better. What is the CGMA of Basic Matrix Multiplication?

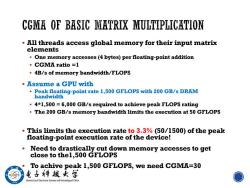

CGMA OF BASIC MATRIX MULTIPLICATION All threads access global memory for their input matrix elements .One memory accesses(4 bytes)per floating-point addition ·CGMA ratio=l 4B/s of memory bandwidth/FLOPS .Assume a GPU with Peak floating-point rate 1,500 GFLOPS with 200 GB/s DRAM bandwidth 4*1,500=6,000 GB/s required to achieve peak FLOPS rating The 200 GB/s memory bandwidth limits the execution at 50 GFLOPS This limits the execution rate to 3.3%(50/1500)of the peak floating-point execution rate of the device! Need to drastically cut down memory accesses to get close to thel,500 GFLOPS To achive peak 1,500 GFLOPS,we need CGMA=30 U 电子件点女学 University of Electreaie Science and Technolory of China

▪ All threads access global memory for their input matrix elements ▪ One memory accesses (4 bytes) per floating-point addition ▪ CGMA ratio =1 ▪ 4B/s of memory bandwidth/FLOPS ▪ Assume a GPU with ▪ Peak floating-point rate 1,500 GFLOPS with 200 GB/s DRAM bandwidth ▪ 4*1,500 = 6,000 GB/s required to achieve peak FLOPS rating ▪ The 200 GB/s memory bandwidth limits the execution at 50 GFLOPS ▪ This limits the execution rate to 3.3% (50/1500) of the peak floating-point execution rate of the device! ▪ Need to drastically cut down memory accesses to get close to the1,500 GFLOPS ▪ To achive peak 1,500 GFLOPS, we need CGMA=30

QUESTION How to improve memory access efficiency? .To increase calculation .To improve utilization To utilize the memory hierarchy .Global memory .Shared memory .Register file 电子料发女学 Universityof Electrcience and TachnolopChina O

▪How to improve memory access efficiency? ▪ To increase calculation ▪ To improve utilization ▪ To utilize the memory hierarchy ▪ Global memory ▪ Shared memory ▪ Register file

MEMORY AND REGISTERS IN THE VON-NEUMANN MODEL Memory 1/0 Processing Unit ALU Reg File Control Unit PC IR 电子料做女学 University of ElectriScience and TachnolopfChina 0

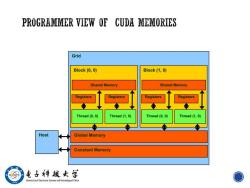

PROGRAMMER VIEW OF CUDA MEMORIES Grid Block (0,0) B1ock(1,0) Shared Memory Shared Memory Registers Registers Registers Registers Thread(0,0) Thread(1,0) Thread (0,0) Thread(1,0) Host Global Memory Constant Memory 电子料战女学 Universityf Electr Science and TachnoloChina O

按次数下载不扣除下载券;

注册用户24小时内重复下载只扣除一次;

顺序:VIP每日次数-->可用次数-->下载券;

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 02 CUDA PARALLELISM MODEL.pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 01 Introduction To Cuda C.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)NVIDIA CUDA C Programming Guide(Design Guide,June 2017).pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Methods of conjugate gradients for solving linear systems.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)NVIDIA Parallel Prefix Sum(Scan)with CUDA(April 2007).pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Single-pass Parallel Prefix Scan with Decoupled Look-back.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Program Optimization Space Pruning for a Multithreaded GPU.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Optimization Principles and Application Performance Evaluation of a Multithreaded GPU Using CUDA.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Some Computer Organizations and Their Effectiveness.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)Software and the Concurrency Revolution.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)An Asymmetric Distributed Shared Memory Model for Heterogeneous Parallel Systems.pdf

- 《GPU并行编程 GPU Parallel Programming》课程教学资源(参考文献)MPI A Message-Passing Interface Standard(Version 2.2).pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)19 Firewall Design Methods.pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)18 Web Security(SQL Injection and Cross-Site Request Forgery).pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)17 Web Security(Cookies and Cross Site Scripting,XSS).pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)16 Bloom Filter for Network Security.pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)15 Bloom Filters and its Variants.pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)14 Buffer Overflow Attacks.pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)13 Human Authentication.pdf

- 南京大学:《网络安全与入侵检测 Network Security and Intrusion Detection》课程教学资源(课件讲稿)12 Secure Socket Layer(SSL)、TLS(Transport Layer Security).pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 04 Performance considerations.pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 05 PARALLEL COMPUTATION PATTERNS(HISTOGRAM).pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 06 PARALLEL COMPUTATION PATTERNS(SCAN).pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 07 JOINT CUDA-MPI PROGRAMMING.pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 08 Parallel Sparse Methods.pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 09 Parallel patterns(MERGE SORT).pdf

- 电子科技大学:《GPU并行编程 GPU Parallel Programming》课程教学资源(课件讲稿)Lecture 10 Computational Thinking.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)课程简介(杜平安).pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第一章 绪论.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二章 有限元法的基本原理(平面问题有限元法).pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第七章 动态分析有限元法 FEM of Dynamic Analysis.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第3~6章 其他问题有限元法.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第八章 热分析有限元法 FEM of Thermal Analysis.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十二章 有限元建模概述 Overview of Finite Element Modeling.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十一章 有限元建模的基本原则.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十四章 几何模型的建立.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十五章 单元类型及特性定义.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十六章 网格划分方法.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十七章 模型检查与处理 Model Checking and Processing.pdf

- 电子科技大学:《有限元理论与建模方法 Finite Element Analysis and Modeling》研究生课程教学资源(课件讲稿)第二篇 有限元建模方法 第十八章 边界条件的建立 Creation of Boundary Condition.pdf