University of Electronic Science and Technology of China: GPU Parallel Programming course materials (lecture slides), Lecture 10 Computational Thinking

NVIDIA GPU Teaching Kit – Accelerated Computing, University of Illinois at Urbana-Champaign. Module 11 – Computational Thinking

Objective
– To provide you with a framework for further studies on
  – thinking about the problems of parallel programming
  – discussing your work with others
  – approaching complex parallel programming problems
  – using or building useful tools and environments

Fundamentals of Parallel Computing
– Parallel computing requires that
  – the problem can be decomposed into sub-problems that can be safely solved at the same time
  – the programmer structures the code and data to solve these sub-problems concurrently
– The goals of parallel computing are
  – to solve problems in less time (strong scaling), and/or
  – to solve bigger problems (weak scaling), and/or
  – to achieve better solutions (advancing science)
The problems must be large enough to justify parallel computing and to exhibit exploitable concurrency.
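The slide names strong and weak scaling but does not quantify them. They are commonly modeled with Amdahl's law (fixed problem size) and Gustafson's law (problem size grows with the processor count). The C++ sketch below is not from the original slides. It assumes a single serial fraction `s` and ignores communication and synchronization costs. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Strong scaling (fixed problem size): Amdahl's law.
// serial_fraction: fraction s of the single-processor run time that cannot be
// parallelized; p: number of processors. Speedup = 1 / (s + (1 - s) / p).
double amdahl_speedup(double serial_fraction, int p) {
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / p);
}

// Weak scaling (problem size grows with p): Gustafson's law.
// serial_fraction: fraction s of the run time on p processors spent in serial
// code. Scaled speedup = p - s * (p - 1).
double gustafson_speedup(double serial_fraction, int p) {
    return p - serial_fraction * (p - 1);
}
```

For example, with s = 0.1 the Amdahl speedup on 10 processors is 1/0.19 ≈ 5.26, and it can never reach 10 however many processors are added. The Gustafson scaled speedup on 10 processors is 9.1. This is one way to see why bigger problems often justify parallel computing more easily than fixed-size ones.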

Shared Memory vs. Message Passing
– We have focused on shared memory parallel programming
  – This is what CUDA (and OpenMP, OpenCL) is based on
  – Future massively parallel microprocessors are expected to support shared memory at the chip level
– The programming considerations of the message passing model are quite different!
  – However, you will find parallels for almost every technique you learned in this course
  – You need to be aware of space-time constraints (where each piece of data resides and when it must be communicated)
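Here is a minimal sketch of the message-passing style. It uses C++ threads as stand-ins for separate processes. The `Mailbox` class and `message_passing_sum` function are hypothetical. Each worker receives a private copy of its input slice and communicates only by sending its partial result to the receiving thread. In a real message-passing system such as MPI, ranks have separate address spaces and would exchange data with calls such as `MPI_Send` and `MPI_Recv` instead.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical mailbox: the only point of contact between threads.
// Senders deposit values; the receiver blocks until one arrives.
class Mailbox {
public:
    void send(long value) {
        {
            std::lock_guard<std::mutex> lock(m_);
            q_.push(value);
        }
        cv_.notify_one();
    }
    long receive() {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return !q_.empty(); });
        long v = q_.front();
        q_.pop();
        return v;
    }
private:
    std::mutex m_;
    std::condition_variable cv_;
    std::queue<long> q_;
};

// Message-passing style sum: each worker owns a private copy of its slice
// (no shared array), computes a partial sum, and sends it to the receiver.
long message_passing_sum(const std::vector<long>& data, int workers) {
    Mailbox to_root;
    std::vector<std::thread> pool;
    const std::size_t n = data.size();
    for (int rank = 0; rank < workers; ++rank) {
        // Explicitly copy this worker's slice, mimicking sending its input.
        std::size_t first = n * rank / workers;
        std::size_t last = n * (rank + 1) / workers;
        std::vector<long> local(data.begin() + first, data.begin() + last);
        pool.emplace_back([local = std::move(local), &to_root] {
            long partial = 0;
            for (long x : local) partial += x;
            to_root.send(partial);
        });
    }
    // The receiver cannot finish until one message per worker has arrived.
    long total = 0;
    for (int i = 0; i < workers; ++i) total += to_root.receive();
    for (auto& t : pool) t.join();
    return total;
}
```

The space-time concern shows up directly. The data each worker needs is copied to it before it starts. The result is complete only after every message has arrived.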

Data Sharing
– Data sharing can be a double-edged sword
  – Excessive data sharing drastically reduces the advantage of parallel execution
  – Localized sharing can improve memory bandwidth efficiency
– Efficient memory bandwidth usage can be achieved by synchronizing the execution of task groups and coordinating their usage of memory data
  – Efficient use of on-chip, shared storage and datapaths
– Read-only sharing can usually be done at much higher efficiency than read-write sharing, which often requires more synchronization
– Sharing patterns: Many:Many, One:Many, Many:One, One:One
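The following sketch contrasts excessive read-write sharing with localized sharing while counting elements above a threshold. It uses C++17 `std::thread`, and the function names are hypothetical. The input vector is shared read-only, which needs no synchronization. The two versions differ only in how the count is accumulated.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// (a) Excessive read-write sharing: every match updates one shared atomic
//     counter, so all threads contend for the same memory location.
long count_shared(const std::vector<int>& data, int threshold, int nthreads) {
    std::atomic<long> count{0};
    std::vector<std::thread> pool;
    for (int t = 0; t < nthreads; ++t) {
        pool.emplace_back([&, t] {
            for (std::size_t i = t; i < data.size(); i += nthreads)
                if (data[i] > threshold) count.fetch_add(1);
        });
    }
    for (auto& th : pool) th.join();
    return count.load();
}

// (b) Localized sharing: each thread counts into a private variable and
//     writes its result once to its own slot (Many:One only at the end).
long count_local(const std::vector<int>& data, int threshold, int nthreads) {
    std::vector<long> partial(nthreads, 0);
    std::vector<std::thread> pool;
    for (int t = 0; t < nthreads; ++t) {
        pool.emplace_back([&, t] {
            long mine = 0;
            for (std::size_t i = t; i < data.size(); i += nthreads)
                if (data[i] > threshold) ++mine;
            partial[t] = mine;  // one write per thread
        });
    }
    for (auto& th : pool) th.join();
    long total = 0;
    for (long p : partial) total += p;  // combine after all threads finish
    return total;
}
```

Both functions return the same count. In `count_shared`, every match is a read-modify-write on one contended location. `count_local` touches shared state only once per thread. How much this matters depends on the hardware and should be measured.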

Synchronization
– Synchronization == Control Sharing
– Barriers make threads wait until all threads catch up
  – Waiting is a lost opportunity for work
– Atomic operations may reduce waiting
  – Watch out for serialization
– Important: be aware of which items of work are truly independent

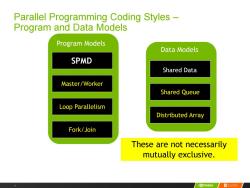
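The sketch below shows when a barrier is genuinely required. It uses a hand-rolled, hypothetical `Barrier` class built on `std::mutex` and `std::condition_variable`. C++20 provides `std::barrier` with `arrive_and_wait()` for the same purpose, and in a CUDA kernel `__syncthreads()` plays this role within a thread block. The writes within each phase are independent. Only the transition between phases needs synchronization.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical reusable barrier: the last thread to arrive releases the rest.
class Barrier {
public:
    explicit Barrier(int count) : count_(count), waiting_(0), generation_(0) {}
    void arrive_and_wait() {
        std::unique_lock<std::mutex> lock(m_);
        int gen = generation_;
        if (++waiting_ == count_) {
            waiting_ = 0;       // reset so the barrier can be reused
            ++generation_;      // start a new generation
            cv_.notify_all();   // wake every waiting thread
        } else {
            cv_.wait(lock, [&] { return gen != generation_; });
        }
    }
private:
    std::mutex m_;
    std::condition_variable cv_;
    int count_, waiting_, generation_;
};

// Thread t writes a[t] in phase 1, then reads its right neighbour's value in
// phase 2. Phase 2 depends on another thread's phase-1 result, so a barrier
// is needed between the phases.
std::vector<int> shift_left_squares(int n) {
    std::vector<int> a(n), b(n);
    Barrier barrier(n);
    std::vector<std::thread> pool;
    for (int t = 0; t < n; ++t) {
        pool.emplace_back([&, t] {
            a[t] = t * t;                 // phase 1: independent writes
            barrier.arrive_and_wait();    // wait until every a[] is written
            b[t] = a[(t + 1) % n];        // phase 2: read a neighbour's result
        });
    }
    for (auto& th : pool) th.join();
    return b;
}
```

For n = 4, phase 1 produces a = {0, 1, 4, 9}, and the function returns {1, 4, 9, 0}. Without the barrier, a thread could read its neighbour's slot before it was written.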

Parallel Programming Coding Styles – Program and Data Models
– Program Models: SPMD, Master/Worker, Loop Parallelism, Fork/Join
– Data Models: Shared Data, Shared Queue, Distributed Array
These are not necessarily mutually exclusive.

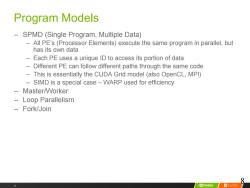

Program Models
– SPMD (Single Program, Multiple Data)
  – All PEs (Processor Elements) execute the same program in parallel, but each has its own data
  – Each PE uses a unique ID to access its portion of data
  – Different PEs can follow different paths through the same code
  – This is essentially the CUDA Grid model (also OpenCL, MPI)
  – SIMD is a special case – CUDA executes each warp in SIMD fashion for efficiency
– Master/Worker
– Loop Parallelism
– Fork/Join

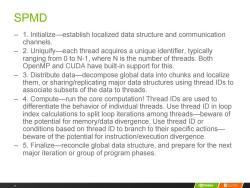
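As a rough CPU analogue of the CUDA grid model, the sketch below launches the same function on several C++ threads, each with a unique ID. In a CUDA kernel the ID would typically be computed as `blockIdx.x * blockDim.x + threadIdx.x`. Here it is simply passed in. The names `saxpy_pe` and `saxpy_spmd` are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <thread>
#include <vector>

// The single program every PE runs. `pe_id` plays the role of a CUDA global
// thread index, and `num_pes` the total number of threads in the grid.
void saxpy_pe(int pe_id, int num_pes, float a,
              const std::vector<float>& x, std::vector<float>& y) {
    // Grid-stride loop: PE k handles elements k, k + num_pes, k + 2*num_pes, ...
    for (std::size_t i = pe_id; i < x.size(); i += num_pes)
        y[i] = a * x[i] + y[i];
}

// Launch the same program on num_pes threads, each with its own ID.
void saxpy_spmd(int num_pes, float a, const std::vector<float>& x,
                std::vector<float>& y) {
    std::vector<std::thread> pes;
    for (int id = 0; id < num_pes; ++id)
        pes.emplace_back(saxpy_pe, id, num_pes, a, std::cref(x), std::ref(y));
    for (auto& t : pes) t.join();
}
```

Calling `saxpy_spmd(3, 2.0f, x, y)` with x = {1, 2, 3, 4, 5} and y filled with 1.0f leaves y = {3, 5, 7, 9, 11}. PE 0 handles indices 0 and 3, PE 1 handles 1 and 4, and PE 2 handles 2.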

SPMD
1. Initialize – establish localized data structures and communication channels.
2. Uniquify – each thread acquires a unique identifier, typically ranging from 0 to N-1, where N is the number of threads. Both OpenMP and CUDA have built-in support for this.
3. Distribute data – decompose global data into chunks and localize them, or share/replicate major data structures, using thread IDs to associate subsets of the data with threads.
4. Compute – run the core computation! Thread IDs are used to differentiate the behavior of individual threads.
   – Use the thread ID in loop index calculations to split loop iterations among threads; beware of the potential for memory/data divergence.
   – Use the thread ID, or conditions based on it, to branch to thread-specific actions; beware of the potential for instruction/execution divergence.
5. Finalize – reconcile global data structures, and prepare for the next major iteration or group of program phases.

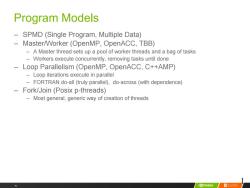
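The five SPMD steps can be traced in a minimal SPMD sum, sketched here with C++ threads. The function name `spmd_sum` is hypothetical. Each step is marked in a comment. Step 3 uses contiguous blocks here, which is only one possible decomposition.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// SPMD sum of a vector, organized around the five steps on the slide.
long spmd_sum(const std::vector<long>& global, int num_threads) {
    // 1. Initialize: one private result slot per thread (localized data).
    std::vector<long> partial(num_threads, 0);

    auto program = [&](int id) {  // 2. Uniquify: `id` is unique in [0, N-1].
        // 3. Distribute data: contiguous block [first, last) owned by this id.
        std::size_t n = global.size();
        std::size_t first = n * id / num_threads;
        std::size_t last = n * (id + 1) / num_threads;
        // 4. Compute: same code for all threads; the id selects the data.
        long sum = 0;
        for (std::size_t i = first; i < last; ++i) sum += global[i];
        partial[id] = sum;
    };

    std::vector<std::thread> threads;
    for (int id = 0; id < num_threads; ++id) threads.emplace_back(program, id);
    for (auto& t : threads) t.join();

    // 5. Finalize: reconcile the per-thread results into the global answer.
    long total = 0;
    for (long p : partial) total += p;
    return total;
}
```

On a GPU, giving each thread a contiguous block is usually a poor choice. Neighboring threads would access addresses far apart, which prevents memory coalescing, so cyclic (grid-stride) indexing is generally preferred there. This ties into the memory divergence warning in step 4.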

Program Models
– SPMD (Single Program, Multiple Data)
– Master/Worker (OpenMP, OpenACC, TBB)
  – A master thread sets up a pool of worker threads and a bag of tasks
  – Workers execute concurrently, removing tasks until done
– Loop Parallelism (OpenMP, OpenACC, C++ AMP)
  – Loop iterations execute in parallel
  – FORTRAN do-all (truly parallel), do-across (with dependence)
– Fork/Join (POSIX threads)
  – The most general, generic way of creating threads
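The Master/Worker and Fork/Join models can be combined in one small sketch. The master builds a bag of tasks, here hypothetical prime-counting ranges. It then forks a pool of workers with `std::thread`, which on POSIX systems is typically implemented on top of pthreads, and joins them at the end. For brevity, the bag is a plain vector with an atomic index of the next unclaimed task. This is an assumption of the sketch, not how OpenMP or TBB implement their task pools.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical task: count the primes in [lo, hi).
struct Task { int lo, hi; };

static int count_primes(int lo, int hi) {
    int c = 0;
    for (int n = lo; n < hi; ++n) {
        if (n < 2) continue;
        bool prime = true;
        for (int d = 2; d * d <= n; ++d)
            if (n % d == 0) { prime = false; break; }
        c += prime;
    }
    return c;
}

int master_worker_primes(int limit, int chunk, int num_workers) {
    // Master: build the bag of tasks.
    std::vector<Task> bag;
    for (int lo = 0; lo < limit; lo += chunk)
        bag.push_back({lo, std::min(lo + chunk, limit)});

    std::atomic<std::size_t> next{0};  // index of the next unclaimed task
    std::atomic<int> total{0};

    // Fork: start the pool of workers.
    std::vector<std::thread> workers;
    for (int w = 0; w < num_workers; ++w) {
        workers.emplace_back([&] {
            // Each worker removes tasks until the bag is empty.
            for (std::size_t i = next.fetch_add(1); i < bag.size();
                 i = next.fetch_add(1))
                total.fetch_add(count_primes(bag[i].lo, bag[i].hi));
        });
    }
    // Join: the master waits for every worker to finish.
    for (auto& t : workers) t.join();
    return total.load();
}
```

Tasks covering larger numbers take longer, so workers that finish early simply claim more tasks. The loop over tasks is also a do-all loop, because its iterations are independent. In OpenMP it could be written with `#pragma omp parallel for schedule(dynamic) reduction(+:total)`. A loop with a carried dependence, such as a running prefix sum, is a do-across loop instead: iterations can overlap only if the dependence is respected through synchronization.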
